Stemmatics

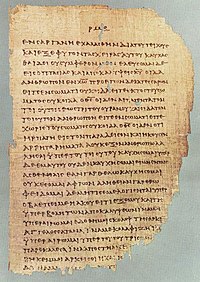

Textual criticism is a branch of textual scholarship, philology, and literary criticism that is concerned with the identification of textual variants, or different versions, of either manuscripts (mss) or printed books. Such texts may range in date from the earliest writing in cuneiform, impressed on clay, to multiple unpublished versions of a 21st-century author's work. Historically, scribes who were paid to copy documents may have been literate, but many were simply copyists, mimicking the shapes of letters without necessarily understanding what they meant. This means that unintentional alterations were common when manuscripts were copied by hand. Intentional alterations may also have been made, for example when printed work was censored for political, religious or cultural reasons.

The objective of the textual critic's work is to provide a better understanding of the creation and historical transmission of the text and its variants. This understanding may lead to the production of a critical edition containing a scholarly curated text. If a scholar has several versions of a manuscript but no known original, then established methods of textual criticism can be used to seek to reconstruct the original text as closely as possible. The same methods can be used to reconstruct intermediate versions, or recensions, of a document's transcription history, depending on the number and quality of the texts available.

The single original text that a scholar theorizes to exist is referred to as the urtext (in the context of Biblical studies), the archetype or the autograph; however, there is not necessarily a single original text for every group of texts. For example, if a story was spread by oral tradition and then later written down by different people in different locations, the versions can vary greatly.

There are many approaches or methods to the practice of textual criticism, notably eclecticism, stemmatics, and copy-text editing. Quantitative techniques are also used to determine the relationships between witnesses to a text, with methods from evolutionary biology (phylogenetics) appearing to be effective on a range of traditions.

In some domains, such as religious and classical text editing, the phrase "lower criticism" refers to textual criticism and "higher criticism" to the endeavor to establish the authorship, date, and place of composition of the original text.

History

Textual criticism has been practiced for over two thousand years, as one of the philological arts. Early textual critics, especially the librarians of Hellenistic Alexandria in the last two centuries BC, were concerned with preserving the works of antiquity, and this continued through the Middle Ages into the early modern period and the invention of the printing press. Textual criticism was an important aspect of the work of many Renaissance humanists, such as Desiderius Erasmus, who edited the Greek New Testament, creating the Textus Receptus. In Italy, scholars such as Petrarch and Poggio Bracciolini collected and edited many Latin manuscripts, while a new spirit of critical enquiry was boosted by the attention to textual states, for example in the work of Lorenzo Valla on the purported Donation of Constantine.

Many ancient works, such as the Bible and the Greek tragedies, survive in hundreds of copies, and the relationship of each copy to the original may be unclear. Textual scholars have debated for centuries which sources are most closely derived from the original, and hence which readings in those sources are correct. Although texts such as Greek plays presumably had one original, scholars have discussed whether some biblical books, like the Gospels, ever had just one original. Interest in applying textual criticism to the Quran has also grown since the discovery of the Sana'a manuscripts in 1972, which possibly date back to the seventh or eighth century.

In the English language, the works of William Shakespeare have been a particularly fertile ground for textual criticism—both because the texts, as transmitted, contain a considerable amount of variation, and because the effort and expense of producing superior editions of his works have always been widely viewed as worthwhile. The principles of textual criticism, although originally developed and refined for works of antiquity and the Bible, and, for Anglo-American Copy-Text editing, Shakespeare, have been applied to many works, from (near-)contemporary texts to the earliest known written documents. Ranging from ancient Mesopotamia and Egypt to the twentieth century, textual criticism covers a period of about five millennia.

Basic notions and objectives

The basic problem, as described by Paul Maas, is as follows:

We have no autograph [handwritten by the original author] manuscripts of the Greek and Roman classical writers and no copies which have been collated with the originals; the manuscripts we possess derive from the originals through an unknown number of intermediate copies, and are consequently of questionable trustworthiness. The business of textual criticism is to produce a text as close as possible to the original (constitutio textus).

Maas comments further that "A dictation revised by the author must be regarded as equivalent to an autograph manuscript". The lack of autograph manuscripts applies to many cultures other than Greek and Roman. In such a situation, a key objective becomes the identification of the first exemplar before any split in the tradition. That exemplar is known as the archetype. "If we succeed in establishing the text of [the archetype], the constitutio (reconstruction of the original) is considerably advanced."

The textual critic's ultimate objective is the production of a "critical edition". This contains the text that the editor has determined most closely approximates the original, and is accompanied by an apparatus criticus, or critical apparatus. The critical apparatus presents the editor's work in three parts: first, a list or description of the evidence that the editor used (names of manuscripts, or abbreviations called sigla); second, the editor's analysis of that evidence (sometimes a simple likelihood rating); and third, a record of rejected variants of the text (often in order of preference).

Process

Before inexpensive mechanical printing, literature was copied by hand, and many variations were introduced by copyists. The age of printing made the scribal profession effectively redundant. Printed editions, while less susceptible to the proliferation of variations likely to arise during manual transmission, are nonetheless not immune to introducing variations from an author's autograph. Instead of a scribe miscopying his source, a compositor or a printing shop may read or typeset a work in a way that differs from the autograph. Since each scribe or printer commits different errors, reconstruction of the lost original is often aided by a selection of readings taken from many sources. An edited text that draws from multiple sources is said to be eclectic. In contrast to this approach, some textual critics prefer to identify the single best surviving text, and not to combine readings from multiple sources.

When comparing different documents, or "witnesses", of a single, original text, the observed differences are called variant readings, or simply variants or readings. It is not always apparent which single variant represents the author's original work. The process of textual criticism seeks to explain how each variant may have entered the text, either by accident (duplication or omission) or by intention (harmonization or censorship), as scribes or supervisors transmitted the original author's text by copying it. The textual critic's task, therefore, is to sort through the variants, eliminating those most likely to be unoriginal, hence establishing a critical text, or critical edition, that is intended to best approximate the original. At the same time, the critical text should document variant readings, so the relation of extant witnesses to the reconstructed original is apparent to a reader of the critical edition. In establishing the critical text, the textual critic considers both "external" evidence (the age, provenance, and affiliation of each witness) and "internal" or "physical" considerations (what the author and scribes, or printers, were likely to have done).
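To make the notions of witness, variant reading and apparatus concrete, here is a minimal Python sketch of collation. It assumes the witnesses have already been aligned word for word (real collation must first detect insertions and omissions, which this ignores); the sigla A, B and C, the sample sentences, and the helper names `collate` and `apparatus_entry` are hypothetical. The adopted reading (the lemma) is supplied by hand, because choosing it is precisely the critic's judgment.

```python
from collections import defaultdict


def collate(witnesses):
    """Return the places of variation among pre-aligned witnesses.

    `witnesses` maps a siglum to a list of words; all lists are assumed to be
    aligned and of equal length. The result is a list of
    (position, {reading: [sigla attesting it]}) for positions that differ.
    """
    sigla = sorted(witnesses)
    length = len(witnesses[sigla[0]])
    if any(len(witnesses[s]) != length for s in sigla):
        raise ValueError("witnesses must be aligned to the same length")

    variants = []
    for pos in range(length):
        readings = defaultdict(list)
        for s in sigla:
            readings[witnesses[s][pos]].append(s)
        if len(readings) > 1:  # full agreement needs no apparatus entry
            variants.append((pos, dict(readings)))
    return variants


def apparatus_entry(readings, lemma):
    """Format one entry as 'lemma] sigla; variant sigla; ...'.

    `lemma` is the reading the editor has adopted; the other readings are
    recorded as rejected variants, most widely attested first.
    """
    if lemma not in readings:
        raise ValueError("the lemma must be an attested reading")
    rejected = sorted((r for r in readings if r != lemma),
                      key=lambda r: (-len(readings[r]), r))
    parts = [f"{lemma}] {' '.join(readings[lemma])}"]
    parts += [f"{r} {' '.join(readings[r])}" for r in rejected]
    return "; ".join(parts)


witnesses = {
    "A": "in the beginning was the word".split(),
    "B": "in the beginning was the world".split(),
    "C": "in the begynning was the word".split(),
}
editor_choices = {2: "beginning", 5: "word"}  # the critic's decisions
for pos, readings in collate(witnesses):
    print(apparatus_entry(readings, editor_choices[pos]))
```

This prints `beginning] A B; begynning C` and `word] A C; world B`. Listing rejected variants by number of witnesses is just one convenient convention for the sketch.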

The collation of all known variants of a text is referred to as a variorum, namely a work of textual criticism whereby all variations and emendations are set side by side so that a reader can track how textual decisions have been made in the preparation of a text for publication. The Bible and the works of William Shakespeare have often been the subjects of variorum editions, although the same techniques have been applied with less frequency to many other works, such as Walt Whitman's Leaves of Grass, and the prose writings of Edward Fitzgerald.

In practice, citation of manuscript evidence implies any of several methodologies. The ideal, but most costly, method is physical inspection of the manuscript itself; alternatively, published photographs or facsimile editions may be inspected. This method involves paleographical analysis—interpretation of handwriting, incomplete letters and even reconstruction of lacunae. More typically, editions of manuscripts are consulted, which have done this paleographical work already.

Eclecticism

Eclecticism refers to the practice of consulting a wide diversity of witnesses to a particular original. The practice is based on the principle that the more independent transmission histories there are, the less likely they will be to reproduce the same errors. What one omits, the others may retain; what one adds, the others are unlikely to add. Eclecticism allows inferences to be drawn regarding the original text, based on the evidence of contrasts between witnesses.

Eclectic readings also normally give an impression of the number of witnesses to each available reading. Although a reading supported by the majority of witnesses is frequently preferred, this does not follow automatically. For example, a second edition of a Shakespeare play may include an addition alluding to an event known to have happened between the two editions. Although nearly all subsequent editions may have included the addition, textual critics may reconstruct the original without it.

The result of the process is a text with readings drawn from many witnesses. It is not a copy of any particular manuscript, and may deviate from the majority of existing manuscripts. In a purely eclectic approach, no single witness is theoretically favored. Instead, the critic forms opinions about individual witnesses, relying on both external and internal evidence.

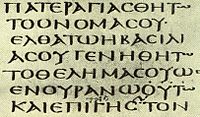

Since the mid-19th century, eclecticism, in which there is no a priori bias to a single manuscript, has been the dominant method of editing the Greek text of the New Testament (currently, the United Bible Societies, 5th ed., and Nestle-Aland, 28th ed.). Even so, the oldest manuscripts, being of the Alexandrian text-type, are the most favored, and the critical text has an Alexandrian disposition.

External evidence

External evidence is evidence of each physical witness, its date, source, and relationship to other known witnesses. Critics will often prefer the readings supported by the oldest witnesses. Since errors tend to accumulate, older manuscripts should have fewer errors. Readings supported by a majority of witnesses are also usually preferred, since these are less likely to reflect accidents or individual biases. For the same reasons, readings supported by the most geographically diverse witnesses are preferred. Some manuscripts show evidence that particular care was taken in their composition, for example, by including alternative readings in their margins, demonstrating that more than one prior copy (exemplar) was consulted in producing the current one. Other factors being equal, these are the best witnesses. The textual critic's judgment becomes necessary when these basic criteria conflict. For instance, there will typically be fewer early copies and a larger number of later copies. The textual critic will attempt to balance these criteria to determine the original text.

There are many other more sophisticated considerations. For example, readings that depart from the known practice of a scribe or a given period may be deemed more reliable, since a scribe is unlikely on his own initiative to have departed from the usual practice.

Internal evidence

Internal evidence is evidence that comes from the text itself, independent of the physical characteristics of the document. Various considerations can be used to decide which reading is the most likely to be original. Sometimes these considerations can be in conflict.

Two common considerations have the Latin names lectio brevior (shorter reading) and lectio difficilior (more difficult reading). The first is the general observation that scribes tended to add words, for clarification or out of habit, more often than they removed them. The second, lectio difficilior potior (the harder reading is stronger), recognizes the tendency for harmonization—resolving apparent inconsistencies in the text. Applying this principle leads to taking the more difficult (unharmonized) reading as being more likely to be the original. Such cases also include scribes simplifying and smoothing texts they did not fully understand.

Another scribal tendency is called homoioteleuton, meaning "similar endings". Homoioteleuton occurs when two words, phrases or lines end with a similar sequence of letters. The scribe, having finished copying the first, skips to the second, omitting all intervening words. Homoioarche refers to the analogous eye-skip when the beginnings of two lines are similar.
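The mechanism of eye-skip, and why it complicates lectio brevior, can be sketched in Python. The model is a deliberate simplification and an assumption, not a description of any real scribe: the exemplar is a list of lines, a "similar ending" means the same last few characters, and the helpers `eye_skip_copy` and `looks_like_homoioteleuton` are hypothetical. The second function shows how a critic might flag a shorter witness whose omission is bounded by similar endings, a case where the shorter reading is probably not original.

```python
def eye_skip_copy(lines, skip_at, ending_len=4):
    """Copy `lines`, simulating one homoioteleuton eye-skip.

    After copying line `skip_at`, the copyist's eye returns to the exemplar,
    lands on the next line with the same last `ending_len` characters, and
    resumes after it, so the lines in between are silently lost.
    """
    ending = lines[skip_at][-ending_len:]
    for j in range(skip_at + 1, len(lines)):
        if lines[j][-ending_len:] == ending:
            return lines[:skip_at + 1] + lines[j + 1:]  # lines skip_at+1..j omitted
    return list(lines)  # no similar ending later on: a faithful copy


def looks_like_homoioteleuton(longer, shorter, ending_len=4):
    """Heuristic check a critic might apply to a shorter witness.

    True if `shorter` equals `longer` minus one contiguous block of lines
    whose last line ends like the line just before the block.
    """
    n = len(longer) - len(shorter)
    if n <= 0:
        return False
    for i in range(1, len(longer) - n + 1):
        if longer[:i] + longer[i + n:] == shorter:
            if longer[i - 1][-ending_len:] == longer[i + n - 1][-ending_len:]:
                return True
    return False


exemplar = [
    "and the king went up to the city",
    "and all the people went with him to the city",
    "and they rejoiced greatly",
]
copy = eye_skip_copy(exemplar, 0)
print(copy)
print(looks_like_homoioteleuton(exemplar, copy))
```

The copy loses the middle line, and the check returns `True`, because the omitted block ends with "city" just like the line before it.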

The critic may also examine the other writings of the author to decide what words and grammatical constructions match his style. The evaluation of internal evidence also provides the critic with information that helps him evaluate the reliability of individual manuscripts. Thus, the consideration of internal and external evidence is related.

After considering all relevant factors, the textual critic seeks the reading that best explains how the other readings would arise. That reading is then the most likely candidate to have been original.

Canons of textual criticism

Various scholars have developed guidelines, or canons of textual criticism, to guide the exercise of the critic's judgment in determining the best readings of a text. One of the earliest was Johann Albrecht Bengel (1687–1752), who in 1734 produced an edition of the Greek New Testament. In his commentary, he established the rule Proclivi scriptioni praestat ardua ("the harder reading is to be preferred").

Johann Jakob Griesbach (1745–1812) published several editions of the New Testament. In his 1796 edition, he established fifteen critical rules. Among them was a variant of Bengel's rule, Lectio difficilior potior, "the harder reading is better." Another was Lectio brevior praeferenda, "the shorter reading is better", based on the idea that scribes were more likely to add than to delete. This rule cannot be applied uncritically, as scribes may omit material inadvertently.

Brooke Foss Westcott (1825–1901) and Fenton Hort (1828–1892) published an edition of the New Testament in Greek in 1881. They proposed nine critical rules, including a version of Bengel's rule, "The reading is less likely to be original that shows a disposition to smooth away difficulties." They also argued that "Readings are approved or rejected by reason of the quality, and not the number, of their supporting witnesses", and that "The reading is to be preferred that most fitly explains the existence of the others."

Many of these rules, although originally developed for biblical textual criticism, have wide applicability to any text susceptible to errors of transmission.

Limitations of eclecticism

Since the canons of criticism are highly susceptible to interpretation, and at times even contradict each other, they may be employed to justify a result that fits the textual critic's aesthetic or theological agenda. Starting in the 19th century, scholars sought more rigorous methods to guide editorial judgment. Stemmatics and copy-text editing, while both eclectic in that they permit the editor to select readings from multiple sources, sought to reduce subjectivity by identifying one or a few witnesses presumed to be favored by "objective" criteria. Citing the sources used and the alternate readings, and providing the original text and images, helps readers and other critics judge the depth of the critic's research and verify the work independently.

Stemmatics

Overview

Stemmatics or stemmatology is a rigorous approach to textual criticism. Karl Lachmann (1793–1851) did much to make this method famous, even though he did not invent it. The method takes its name from the word stemma. The Ancient Greek word στέμματα and its classical Latin loanword stemmata can mean "family trees". In textual criticism, the stemma is a family tree showing the relationships of the surviving witnesses (the first known example of such a stemma, albeit without the name, dates from 1827). The family tree is also referred to as a cladogram. The method works from the principle that "community of error implies community of origin": if two witnesses have a number of errors in common, it may be presumed that they were derived from a common intermediate source, called a hyparchetype. Relations between the lost intermediates are determined by the same process, placing all extant manuscripts in a family tree or stemma codicum descended from a single archetype. The process of constructing the stemma is called recension, or the Latin recensio.
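The principle that community of error implies community of origin can be illustrated with a small Python sketch. It assumes, as the method does, that the critic has already judged which reading is correct at each variant location; the witnesses, the Latin readings and the helper `shared_errors` are hypothetical. Only agreement in error counts: agreement in a correct reading may simply reflect faithful copying and proves nothing about descent.

```python
from itertools import combinations


def shared_errors(witnesses, correct):
    """Count, for each pair of witnesses, the errors they have in common.

    witnesses: {siglum: {location: reading}}
    correct:   {location: reading judged original by the critic}
    A shared error is a location where both witnesses depart from the correct
    reading in the same way; shared correct readings are ignored.
    """
    counts = {}
    for a, b in combinations(sorted(witnesses), 2):
        n = 0
        for loc, right in correct.items():
            ra, rb = witnesses[a].get(loc), witnesses[b].get(loc)
            if ra is not None and ra == rb and ra != right:
                n += 1
        counts[(a, b)] = n
    return counts


correct = {1: "dixit", 2: "rex", 3: "in urbe", 4: "magna"}
witnesses = {
    "A": {1: "dixit", 2: "lex", 3: "in orbe", 4: "magna"},
    "B": {1: "dicit", 2: "lex", 3: "in orbe", 4: "magna"},
    "C": {1: "dixit", 2: "rex", 3: "in urbe", 4: "magnam"},
    "D": {1: "dixit", 2: "rex", 3: "in urbe", 4: "magnam"},
}
for pair, n in shared_errors(witnesses, correct).items():
    print(pair, n)
```

Here A and B share two errors and C and D share one, while every other pair shares none, which would suggest one lost hyparchetype behind A and B and another behind C and D. B's individual error at location 1 says nothing about grouping. A real recension would also have to weigh how distinctive each error is, since trivial slips can arise independently in unrelated copies.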

Having completed the stemma, the critic proceeds to the next step, called selection or selectio, where the text of the archetype is determined by examining variants from the closest hyparchetypes to the archetype and selecting the best ones. If one reading occurs more often than another at the same level of the tree, then the dominant reading is selected. If two competing readings occur equally often, then the editor uses judgment to select the correct reading.
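The mechanical part of selectio can be sketched as follows, assuming the stemma is already established and the readings of the branches nearest the archetype have been reconstructed. The function name `select_reading` and the sigla are hypothetical. A strict majority decides; a tie is returned as undecided, marking the point at which the editor's judgment takes over.

```python
from collections import Counter


def select_reading(branch_readings):
    """Apply the majority rule of selectio at one variant location.

    branch_readings: {siglum of a hyparchetype or independent witness: reading}
    Returns (reading, True) when one reading is strictly more frequent than
    any other, or (None, False) when the most frequent readings tie.
    """
    if not branch_readings:
        raise ValueError("no readings to select from")
    ranked = Counter(branch_readings.values()).most_common()
    if len(ranked) == 1 or ranked[0][1] > ranked[1][1]:
        return ranked[0][0], True
    return None, False


print(select_reading({"alpha": "rex", "beta": "rex", "gamma": "lex"}))
print(select_reading({"alpha": "rex", "beta": "lex"}))
```

The first call selects `rex`; the second cannot decide. With only two branches that disagree, the rule never decides, which is the situation behind Bédier's later criticism of two-branch stemmas.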

After selectio, the text may still contain errors, since there may be passages where no source preserves the correct reading. The step of examination, or examinatio, is applied to find corruptions. Where the editor concludes that the text is corrupt, it is corrected by a process called "emendation", or emendatio (also sometimes called divinatio). Emendations not supported by any known source are sometimes called conjectural emendations.

The process of selectio resembles eclectic textual criticism, but applied to a restricted set of hypothetical hyparchetypes. The steps of examinatio and emendatio resemble copy-text editing. In fact, the other techniques can be seen as special cases of stemmatics in which a rigorous family history of the text cannot be determined but only approximated. If one manuscript seems to be by far the best text, then copy-text editing is appropriate; if a group of manuscripts seems good, then eclecticism on that group would be proper.

The Hodges–Farstad edition of the Greek New Testament attempts to use stemmatics for some portions.

Phylogenetics

Phylogenetics is a technique borrowed from biology, where it was originally named phylogenetic systematics by Willi Hennig. In biology, the technique is used to determine the evolutionary relationships between different species. In textual criticism, the readings of a number of witnesses may be entered into a computer, which records all the differences between them, or they may be derived from an existing apparatus. The manuscripts are then grouped according to their shared characteristics. The difference between phylogenetics and more traditional forms of statistical analysis is that, rather than simply arranging the manuscripts into rough groupings according to their overall similarity, phylogenetics assumes that they are part of a branching family tree and uses that assumption to derive relationships between them. This makes it more like an automated approach to stemmatics. However, where there is a difference, the computer does not attempt to decide which reading is closer to the original text, and so does not indicate which branch of the tree is the "root", that is, which manuscript tradition is closest to the original. Other types of evidence must be used for that purpose.
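One widely used phylogenetic criterion is maximum parsimony: prefer the tree that requires the fewest changes of reading. The Python sketch below scores candidate binary trees with Fitch's algorithm. It is a toy illustration, not the software used by any project mentioned here, and the witnesses and helper names are hypothetical. The input is only the readings, with no judgment of which is correct, and the score stays the same when one unrooted tree is drawn with a different root; this is why such a method cannot by itself say which tradition is closest to the original.

```python
def fitch(tree, states):
    """Fitch's small-parsimony pass for one variant location.

    tree:   a siglum (a leaf) or a 2-tuple of subtrees.
    states: {siglum: reading at this location}
    Returns (set of readings possible at this node, minimum changes below it).
    """
    if not isinstance(tree, tuple):
        return {states[tree]}, 0
    left_set, left_cost = fitch(tree[0], states)
    right_set, right_cost = fitch(tree[1], states)
    common = left_set & right_set
    if common:
        return common, left_cost + right_cost
    return left_set | right_set, left_cost + right_cost + 1  # one change needed


def parsimony_score(tree, witnesses):
    """Total number of changes of reading the tree requires (lower is better)."""
    locations = next(iter(witnesses.values())).keys()
    return sum(
        fitch(tree, {s: w[loc] for s, w in witnesses.items()})[1]
        for loc in locations
    )


witnesses = {
    "A": {1: "dixit", 2: "lex", 3: "in orbe", 4: "magna"},
    "B": {1: "dicit", 2: "lex", 3: "in orbe", 4: "magna"},
    "C": {1: "dixit", 2: "rex", 3: "in urbe", 4: "magnam"},
    "D": {1: "dixit", 2: "rex", 3: "in urbe", 4: "magnam"},
}
candidates = [
    (("A", "B"), ("C", "D")),
    (("A", "C"), ("B", "D")),
    ("A", ("B", ("C", "D"))),  # same unrooted tree as the first, rooted elsewhere
]
for tree in candidates:
    print(tree, parsimony_score(tree, witnesses))
```

The first and third trees score 4 and the second scores 7, so the grouping of A with B and C with D is preferred; the first and third are the same unrooted tree with two different roots and receive the same score.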

Phylogenetics faces the same difficulty as textual criticism: the appearance of characteristics in descendants of an ancestor other than by direct copying (or miscopying) of the ancestor, for example where a scribe combines readings from two or more different manuscripts ("contamination"). The same phenomenon is widely present among living organisms, as instances of horizontal gene transfer (or lateral gene transfer) and genetic recombination, particularly among bacteria. Further exploration of the applicability of the different methods for coping with these problems across both living organisms and textual traditions is a promising area of study.

Software developed for use in biology has been applied successfully to textual criticism; for example, it is being used by the Canterbury Tales Project to determine the relationship between the 84 surviving manuscripts and four early printed editions of The Canterbury Tales. Shaw's edition of Dante's Commedia uses phylogenetic and traditional methods alongside each other in a comprehensive exploration of relations among seven early witnesses to Dante's text.

Limitations and criticism

The stemmatic method assumes that each witness is derived from one, and only one, predecessor. If a scribe refers to more than one source when creating her or his copy, then the new copy will not clearly fall into a single branch of the family tree. In the stemmatic method, a manuscript that is derived from more than one source is said to be contaminated.

The method also assumes that scribes only make new errors—they do not attempt to correct the errors of their predecessors. When a text has been improved by the scribe, it is said to be sophisticated, but "sophistication" impairs the method by obscuring a document's relationship to other witnesses, and making it more difficult to place the manuscript correctly in the stemma.

The stemmatic method requires the textual critic to group manuscripts by commonality of error. It is required, therefore, that the critic can distinguish erroneous readings from correct ones. This assumption has often come under attack. W. W. Greg noted: "That if a scribe makes a mistake he will inevitably produce nonsense is the tacit and wholly unwarranted assumption."

Franz Anton Knittel defended the traditional point of view in theology and opposed modern textual criticism. He defended the authenticity of the Pericope Adulterae (John 7:53–8:11), the Comma Johanneum (1 John 5:7), and the Testimonium Flavianum. According to Knittel, Erasmus did not take the Comma from Codex Montfortianus into his Novum Instrumentum omne, because of grammatical differences, but used the Complutensian Polyglot instead. Knittel also held that the Comma was known to Tertullian.

The stemmatic method's final step is emendatio, also sometimes referred to as "conjectural emendation". But, in fact, the critic employs conjecture at every step of the process. Some of the method's rules that are designed to reduce the exercise of editorial judgment do not necessarily produce the correct result. For example, where there are more than two witnesses at the same level of the tree, normally the critic will select the dominant reading. However, it may be no more than fortuitous that more witnesses have survived that present a particular reading. A plausible reading that occurs less often may, nevertheless, be the correct one.

Lastly, the stemmatic method assumes that every extant witness is derived, however remotely, from a single source. It does not account for the possibility that the original author may have revised her or his work, and that the text could have existed at different times in more than one authoritative version.

Best-text editing

The critic Joseph Bédier (1864–1938), who had worked with stemmatics, launched an attack on that method in 1928. He surveyed editions of medieval French texts that were produced with the stemmatic method, and found that textual critics tended overwhelmingly to produce bifid trees, divided into just two branches. He concluded that this outcome was unlikely to have occurred by chance, and that therefore, the method was tending to produce bipartite stemmas regardless of the actual history of the witnesses. He suspected that editors tended to favor trees with two branches, as this would maximize the opportunities for editorial judgment (as there would be no third branch to "break the tie" whenever the witnesses disagreed). He also noted that, for many works, more than one reasonable stemma could be postulated, suggesting that the method was not as rigorous or as scientific as its proponents had claimed.

Bédier's doubts about the stemmatic method led him to consider whether it could be dropped altogether. As an alternative to stemmatics, Bédier proposed a best-text editing method, in which a single textual witness, judged by the editor to be in a 'good' textual state, is emended as lightly as possible for manifest transmission mistakes but otherwise left unchanged. This makes a best-text edition essentially a documentary edition. An example is Eugène Vinaver's edition of the Winchester Manuscript of Malory's Le Morte d'Arthur.

Copy-text editing

When copy-text editing, the scholar fixes errors in a base text, often with the help of other witnesses. Often, the base text is selected from the oldest manuscript of the text, but in the early days of printing, the copy text was often a manuscript that was at hand.

Using the copy-text method, the critic examines the base text and makes corrections (called emendations) in places where the base text appears wrong to the critic. This can be done by looking for places in the base text that do not make sense or by looking at the text of other witnesses for a superior reading. Close-call decisions are usually resolved in favor of the copy-text.

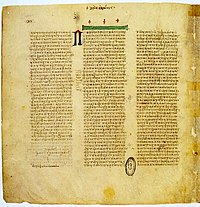

The first published, printed edition of the Greek New Testament was produced by this method. Erasmus, the editor, selected a manuscript from the local Dominican monastery in Basle and corrected its obvious errors by consulting other local manuscripts. The Westcott and Hort text, which was the basis for the Revised Version of the English Bible, also used the copy-text method, with the Codex Vaticanus as the base manuscript.

McKerrow's concept of copy-text

The bibliographer Ronald B. McKerrow introduced the term copy-text in his 1904 edition of the works of Thomas Nashe, defining it as "the text used in each particular case as the basis of mine". McKerrow was aware of the limitations of the stemmatic method, and believed it was more prudent to choose one particular text that was thought to be particularly reliable, and then to emend it only where the text was obviously corrupt. The French critic Joseph Bédier likewise became disenchanted with the stemmatic method, and concluded that the editor should choose the best available text, and emend it as little as possible.

In McKerrow's method as originally introduced, the copy-text was not necessarily the earliest text. In some cases, McKerrow would choose a later witness, noting that "if an editor has reason to suppose that a certain text embodies later corrections than any other, and at the same time has no ground for disbelieving that these corrections, or some of them at least, are the work of the author, he has no choice but to make that text the basis of his reprint".

By 1939, in his Prolegomena for the Oxford Shakespeare, McKerrow had changed his mind about this approach, as he feared that a later edition—even if it contained authorial corrections—would "deviate more widely than the earliest print from the author's original manuscript". He therefore concluded that the correct procedure would be "produced by using the earliest 'good' print as copy-text and inserting into it, from the first edition which contains them, such corrections as appear to us to be derived from the author". But, fearing the arbitrary exercise of editorial judgment, McKerrow stated that, having concluded that a later edition had substantive revisions attributable to the author, "we must accept all the alterations of that edition, saving any which seem obvious blunders or misprints".

W. W. Greg's rationale of copy-text

Anglo-American textual criticism in the last half of the 20th century came to be dominated by a landmark 1950 essay by Sir Walter W. Greg, "The Rationale of Copy-Text". Greg proposed:

[A] distinction between the significant, or as I shall call them 'substantive', readings of the text, those namely that affect the author's meaning or the essence of his expression, and others, such in general as spelling, punctuation, word-division, and the like, affecting mainly its formal presentation, which may be regarded as the accidents, or as I shall call them 'accidentals', of the text.

Greg observed that compositors at printing shops tended to follow the "substantive" readings of their copy faithfully, except when they deviated unintentionally; but that "as regards accidentals they will normally follow their own habits or inclination, though they may, for various reasons and to varying degrees, be influenced by their copy".

He concluded:

The true theory is, I contend, that the copy-text should govern (generally) in the matter of accidentals, but that the choice between substantive readings belongs to the general theory of textual criticism and lies altogether beyond the narrow principle of the copy-text. Thus it may happen that in a critical edition the text rightly chosen as copy may not by any means be the one that supplies most substantive readings in cases of variation. The failure to make this distinction and to apply this principle has naturally led to too close and too general a reliance upon the text chosen as basis for an edition, and there has arisen what may be called the tyranny of the copy-text, a tyranny that has, in my opinion, vitiated much of the best editorial work of the past generation.

Greg's view, in short, was that the "copy-text can be allowed no over-riding or even preponderant authority so far as substantive readings are concerned". The choice between reasonable competing readings, he said:

[W]ill be determined partly by the opinion the editor may form respecting the nature of the copy from which each substantive edition was printed, which is a matter of external authority; partly by the intrinsic authority of the several texts as judged by the relative frequency of manifest errors therein; and partly by the editor's judgment of the intrinsic claims of individual readings to originality—in other words their intrinsic merit, so long as by 'merit' we mean the likelihood of their being what the author wrote rather than their appeal to the individual taste of the editor.

Although Greg argued that editors should be free to use their judgment to choose between competing substantive readings, he suggested that an editor should defer to the copy-text when "the claims of two readings ... appear to be exactly balanced. ... In such a case, while there can be no logical reason for giving preference to the copy-text, in practice, if there is no reason for altering its reading, the obvious thing seems to be to let it stand." The "exactly balanced" variants are said to be indifferent.

Editors who follow Greg's rationale produce eclectic editions, in that the authority for the "accidentals" is derived from one particular source (usually the earliest one) that the editor considers to be authoritative, but the authority for the "substantives" is determined in each individual case according to the editor's judgment. The resulting text, except for the accidentals, is constructed without relying predominantly on any one witness.
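Greg's division of labour between accidentals (spelling, punctuation, word-division) and substantives (wording) can be illustrated as a small procedure. The Python below is a hypothetical sketch, not a formalization published by Greg or Bowers. It assumes each variant unit is already tagged as accidental or substantive, and it models the editor's judgment as a numeric "merit" score per reading, which is purely an illustrative assumption. Accidentals come from the copy-text, substantives go to the reading judged most likely authorial, and exactly balanced ("indifferent") substantives fall back to the copy-text. The sigla Q and F and all readings are made up.

```python
from dataclasses import dataclass, field


@dataclass
class VariantUnit:
    """One point of variation across witnesses (hypothetical data model)."""
    kind: str                 # "accidental" or "substantive"
    readings: dict[str, str]  # witness siglum -> reading at this point
    # Editor's judgment of each witness's reading, assumed numeric for illustration.
    merit: dict[str, float] = field(default_factory=dict)


def establish_text(units: list[VariantUnit], copy_text: str) -> list[str]:
    """Choose one reading per unit following Greg's rationale of copy-text."""
    chosen = []
    for unit in units:
        default = unit.readings[copy_text]
        if unit.kind == "accidental":
            # The copy-text governs accidentals.
            chosen.append(default)
            continue
        # Substantives: find the reading(s) the editor judges most likely authorial.
        best = max(unit.merit.values())
        leaders = {unit.readings[w] for w, m in unit.merit.items() if m == best}
        if default in leaders:
            # Indifferent variants: let the copy-text reading stand.
            chosen.append(default)
        else:
            # sorted() only keeps the choice deterministic if rival readings tie.
            chosen.append(sorted(leaders)[0])
    return chosen


# Hypothetical witnesses Q (chosen as copy-text) and F.
units = [
    VariantUnit("accidental", {"Q": "honour", "F": "honor"}),
    VariantUnit("substantive", {"Q": "journey", "F": "voyage"}, {"Q": 0.4, "F": 0.7}),
    VariantUnit("substantive", {"Q": "haste", "F": "speed"}, {"Q": 0.5, "F": 0.5}),
]
print(establish_text(units, copy_text="Q"))  # ['honour', 'voyage', 'haste']
```

The resulting text keeps the copy-text's spelling throughout but adopts F's wording where the editor prefers it, which is the eclectic result the paragraph describes.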

Greg–Bowers–Tanselle

W. W. Greg did not live long enough to apply his rationale of copy-text to any actual editions of works. His rationale was adopted and significantly expanded by Fredson Bowers (1905–1991). Starting in the 1970s, G. Thomas Tanselle vigorously took up the method's defense and added significant contributions of his own. Greg's rationale as practiced by Bowers and Tanselle has come to be known as the "Greg–Bowers" or the "Greg–Bowers–Tanselle" method.

Application to works of all periods

In his 1964 essay, "Some Principles for Scholarly Editions of Nineteenth-Century American Authors", Bowers said that "the theory of copy-text proposed by Sir Walter Greg rules supreme". Bowers's assertion of "supremacy" was in contrast to Greg's more modest claim that "My desire is rather to provoke discussion than to lay down the law".

Whereas Greg had limited his illustrative examples to English Renaissance drama, where his expertise lay, Bowers argued that the rationale was "the most workable editorial principle yet contrived to produce a critical text that is authoritative in the maximum of its details whether the author be Shakespeare, Dryden, Fielding, Nathaniel Hawthorne, or Stephen Crane. The principle is sound without regard for the literary period." For works where an author's manuscript survived—a case Greg had not considered—Bowers concluded that the manuscript should generally serve as copy-text. Citing the example of Nathaniel Hawthorne, he noted:

When an author's manuscript is preserved, this has paramount authority, of course. Yet the fallacy is still maintained that since the first edition was proofread by the author, it must represent his final intentions and hence should be chosen as copy-text. Practical experience shows the contrary. When one collates the manuscript of The House of the Seven Gables against the first printed edition, one finds an average of ten to fifteen differences per page between the manuscript and the print, many of them consistent alterations from the manuscript system of punctuation, capitalization, spelling, and word-division. It would be ridiculous to argue that Hawthorne made approximately three to four thousand small changes in proof, and then wrote the manuscript of The Blithedale Romance according to the same system as the manuscript of the Seven Gables, a system that he had rejected in proof.

Following Greg, the editor would then replace any of the manuscript readings with substantives from printed editions that could be reliably attributed to the author: "Obviously, an editor cannot simply reprint the manuscript, and he must substitute for its readings any words that he believes Hawthorne changed in proof."

Uninfluenced final authorial intention

McKerrow had articulated textual criticism's goal in terms of "our ideal of an author's fair copy of his work in its final state". Bowers asserted that editions founded on Greg's method would "represent the nearest approximation in every respect of the author's final intentions." Bowers stated similarly that the editor's task is to "approximate as nearly as possible an inferential authorial fair copy." Tanselle notes that, "Textual criticism ... has generally been undertaken with a view to reconstructing, as accurately as possible, the text finally intended by the author".

Bowers and Tanselle argue for rejecting textual variants that an author inserted at the suggestion of others. Bowers said that his edition of Stephen Crane's first novel, Maggie, presented "the author's final and uninfluenced artistic intentions." In his writings, Tanselle refers to "unconstrained authorial intention" or "an author's uninfluenced intentions." This marks a departure from Greg, who had merely suggested that the editor inquire whether a later reading "is one that the author can reasonably be supposed to have substituted for the former", not implying any further inquiry as to why the author had made the change.

Tanselle discusses the example of Herman Melville's Typee. After the novel's initial publication, Melville's publisher asked him to soften the novel's criticisms of missionaries in the South Seas. Although Melville pronounced the changes an improvement, Tanselle rejected them in his edition, concluding that "there is no evidence, internal or external, to suggest that they are the kinds of changes Melville would have made without pressure from someone else."

Bowers confronted a similar problem in his edition of Maggie. Crane originally printed the novel privately in 1893. To secure commercial publication in 1896, Crane agreed to remove profanity, but he also made stylistic revisions. Bowers's approach was to preserve the stylistic and literary changes of 1896, but to revert to the 1893 readings where he believed that Crane was fulfilling the publisher's intention rather than his own. There were, however, intermediate cases that could reasonably have been attributed to either intention, and some of Bowers's choices came under fire—both as to his judgment, and as to the wisdom of conflating readings from the two different versions of Maggie.

Hans Zeller argued that it is impossible to tease apart the changes Crane made for literary reasons and those made at the publisher's insistence:

Firstly, in anticipation of the character of the expected censorship, Crane could be led to undertake alterations which also had literary value in the context of the new version. Secondly, because of the systematic character of the work, purely censorial alterations sparked off further alterations, determined at this stage by literary considerations. Again in consequence of the systemic character of the work, the contamination of the two historical versions in the edited text gives rise to a third version. Though the editor may indeed give a rational account of his decision at each point on the basis of the documents, nevertheless to aim to produce the ideal text which Crane would have produced in 1896 if the publisher had left him complete freedom is to my mind just as unhistorical as the question of how the first World War or the history of the United States would have developed if Germany had not caused the USA to enter the war in 1917 by unlimited submarine combat. The nonspecific form of censorship described above is one of the historical conditions under which Crane wrote the second version of Maggie and made it function. From the text which arose in this way it is not possible to subtract these forces and influences, in order to obtain a text of the author's own. Indeed I regard the "uninfluenced artistic intentions" of the author as something which exists only in terms of aesthetic abstraction. Between influences on the author and influences on the text are all manner of transitions.

Bowers and Tanselle recognize that texts often exist in more than one authoritative version. Tanselle argues that:

[T]wo types of revision must be distinguished: that which aims at altering the purpose, direction, or character of a work, thus attempting to make a different sort of work out of it; and that which aims at intensifying, refining, or improving the work as then conceived (whether or not it succeeds in doing so), thus altering the work in degree but not in kind. If one may think of a work in terms of a spatial metaphor, the first might be labeled "vertical revision," because it moves the work to a different plane, and the second "horizontal revision," because it involves alterations within the same plane. Both produce local changes in active intention; but revisions of the first type appear to be in fulfillment of an altered programmatic intention or to reflect an altered active intention in the work as a whole, whereas those of the second do not.

He suggests that where a revision is "horizontal" (i.e., aimed at improving the work as originally conceived), then the editor should adopt the author's later version. But where a revision is "vertical" (i.e., fundamentally altering the work's intention as a whole), then the revision should be treated as a new work, and edited separately on its own terms.

Format for apparatus

Bowers was also influential in defining the form of critical apparatus that should accompany a scholarly edition. In addition to the content of the apparatus, Bowers led a movement to relegate editorial matter to appendices, leaving the critically established text "in the clear", that is, free of any signs of editorial intervention. Tanselle explained the rationale for this approach:

In the first place, an editor's primary responsibility is to establish a text; whether his goal is to reconstruct that form of the text which represents the author's final intention or some other form of the text, his essential task is to produce a reliable text according to some set of principles. Relegating all editorial matter to an appendix and allowing the text to stand by itself serves to emphasize the primacy of the text and permits the reader to confront the literary work without the distraction of editorial comment and to read the work with ease. A second advantage of a clear text is that it is easier to quote from or to reprint. Although no device can insure accuracy of quotation, the insertion of symbols (or even footnote numbers) into a text places additional difficulties in the way of the quoter. Furthermore, most quotations appear in contexts where symbols are inappropriate; thus when it is necessary to quote from a text which has not been kept clear of apparatus, the burden of producing a clear text of the passage is placed on the quoter. Even footnotes at the bottom of the text pages are open to the same objection, when the question of a photographic reprint arises.

Some critics believe that a clear-text edition gives the edited text too great a prominence, relegating textual variants to appendices that are difficult to use, and suggesting a greater sense of certainty about the established text than it deserves. As Shillingsburg notes, "English scholarly editions have tended to use notes at the foot of the text page, indicating, tacitly, a greater modesty about the 'established' text and drawing attention more forcibly to at least some of the alternative forms of the text".

The MLA's CEAA and CSE

In 1963, the Modern Language Association of America (MLA) established the Center for Editions of American Authors (CEAA). The CEAA's Statement of Editorial Principles and Procedures, first published in 1967, adopted the Greg–Bowers rationale in full. A CEAA examiner would inspect each edition, and only those meeting the requirements would receive a seal denoting "An Approved Text."

Between 1966 and 1975, the Center allocated more than $1.5 million in funding from the National Endowment for the Humanities to various scholarly editing projects, which were required to follow the guidelines (including the structure of editorial apparatus) as Bowers had defined them. According to Davis, the funds coordinated by the CEAA over the same period were more than $6 million, counting funding from universities, university presses, and other bodies.

The Center for Scholarly Editions (CSE) replaced the CEAA in 1976. The change of name indicated the shift to a broader agenda than just American authors. The Center also ceased its role in the allocation of funds. The Center's latest guidelines (2003) no longer prescribe a particular editorial procedure.

Application to religious documents

Book of Mormon

The Church of Jesus Christ of Latter-day Saints (LDS Church) includes the Book of Mormon as a foundational reference. LDS members typically believe the book to be a literal historical record.

Although some earlier unpublished studies had been prepared, textual criticism in the full sense was not applied to the Book of Mormon until the early 1970s. At that time BYU Professor Ellis Rasmussen and his associates were asked by the LDS Church to begin preparing a new edition of the Holy Scriptures. One aspect of that effort entailed digitizing the text and preparing appropriate footnotes; another required establishing the most dependable text. To that latter end, Stanley R. Larson (a graduate student of Rasmussen's) applied modern text-critical standards to the manuscripts and early editions of the Book of Mormon in his thesis project, which he completed in 1974. Larson carefully examined the Original Manuscript (the one dictated by Joseph Smith to his scribes) and the Printer's Manuscript (the copy Oliver Cowdery prepared for the printer in 1829–1830), and compared them with the first, second, and third editions of the Book of Mormon to determine what sorts of changes had occurred over time and to judge which readings were the most original. Larson went on to publish a series of well-argued articles on the phenomena he had discovered. Many of his observations were included as improvements in the 1981 LDS edition of the Book of Mormon.

By 1979, with the establishment of the Foundation for Ancient Research and Mormon Studies (FARMS) as a California non-profit research institution, an effort led by Robert F. Smith began to take full account of Larson's work and to publish a Critical Text of the Book of Mormon. Thus was born the FARMS Critical Text Project, which published the first volume of the three-volume Book of Mormon Critical Text in 1984. The third volume of that first edition was published in 1987 but was already being superseded by a second, revised edition of the entire work, greatly aided by the advice and assistance of Grant Hardy (then a Yale doctoral candidate), Dr. Gordon C. Thomasson, Professor John W. Welch (the head of FARMS), Professor Royal Skousen, and others. However, these were merely preliminary steps toward a far more exacting and comprehensive project.

In 1988, with that preliminary phase of the project completed, Professor Skousen took over as editor and head of the FARMS Critical Text of the Book of Mormon Project. He gathered the still-scattered fragments of the Original Manuscript of the Book of Mormon and had advanced photographic techniques applied to obtain readings from otherwise unreadable pages and fragments. He closely examined the Printer's Manuscript (owned by the Community of Christ, formerly the RLDS Church, in Independence, Missouri) for differences in types of ink or pencil, in order to determine when and by whom its corrections were made. He also collated the various editions of the Book of Mormon down to the present to see what sorts of changes have been made over time.

Thus far, Professor Skousen has published complete transcripts of the Original and Printer's Manuscripts, as well as a six-volume analysis of textual variants. Still in preparation are a history of the text and a complete electronic collation of editions and manuscripts (volumes 3 and 5 of the Project, respectively). In the meantime, Yale University Press has published an edition of the Book of Mormon that incorporates all aspects of Skousen's research.

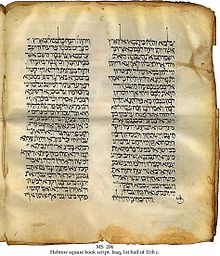

Hebrew Bible

Textual criticism of the Hebrew Bible compares manuscript versions of the following sources (dates refer to the oldest extant manuscripts in each family):

| Manuscript | Examples | Language | Date of composition | Oldest copy |
|---|---|---|---|---|
| Dead Sea Scrolls | Tanakh at Qumran | Hebrew, Paleo-Hebrew and Greek (Septuagint) | c. 150 BCE – 70 CE | c. 150 BCE – 70 CE |
| Septuagint | Codex Vaticanus, Codex Sinaiticus and other earlier papyri | Greek | 300–100 BCE | 2nd century BCE (fragments); 4th century CE (complete) |
| Peshitta | Codex Ambrosianus B.21 | Syriac | early 5th century CE | |
| Vulgate | Quedlinburg Itala fragment, Codex Complutensis I | Latin | early 5th century CE | |
| Masoretic | Aleppo Codex, Leningrad Codex and other incomplete mss | Hebrew | c. 100 CE | 10th century CE |
| Samaritan Pentateuch | Abisha Scroll of Nablus | Hebrew in Samaritan alphabet | 200–100 BCE | Oldest extant mss c. 11th century CE; oldest mss available to scholars 16th century CE; Torah only |
| Targum | | Aramaic | 500–1000 CE | 5th century CE |

As in the New Testament, changes, corruptions, and erasures have been found, particularly in the Masoretic texts. This is attributed to the fact that the early soferim (scribes) did not treat copying errors in the same way as later scribes did.

There are three separate new editions of the Hebrew Bible currently in development: Biblia Hebraica Quinta, the Hebrew University Bible, and the Oxford Hebrew Bible. Biblia Hebraica Quinta is a diplomatic edition based on the Leningrad Codex. The Hebrew University Bible is also diplomatic, but based on the Aleppo Codex. The Oxford Hebrew Bible is an eclectic edition.

New Testament

Early New Testament texts include more than 5,800 Greek manuscripts, 10,000 Latin manuscripts and 9,300 manuscripts in various other ancient languages (including Syriac, Slavic, Ethiopic and Armenian). The manuscripts contain approximately 300,000 textual variants, most of them involving changes of word order and other comparative trivialities. According to Westcott and Hort:

With regard to the great bulk of the words of the New Testament, as of most other ancient writings, there is no variation or other ground of doubt, and therefore no room for textual criticism... The proportion of words virtually accepted on all hands as raised above doubt is very great, not less, on a rough computation, than seven eighths of the whole. The remaining eighth therefore, formed in great part by changes of order and other comparative trivialities, constitutes the whole area of criticism.

Thus, for over 250 years, New Testament scholars have argued that no textual variant affects any doctrine. Professor D. A. Carson states: "nothing we believe to be doctrinally true, and nothing we are commanded to do, is in any way jeopardized by the variants. This is true for any textual tradition. The interpretation of individual passages may well be called in question; but never is a doctrine affected."

The sheer number of witnesses presents unique difficulties, chiefly because it makes stemmatics impossible in many cases: many scribes used two or more different manuscripts as sources. Consequently, New Testament textual critics have adopted eclecticism after sorting the witnesses into three major groups, called text-types. As of 2017, the most common division distinguishes:

| Text-type | Date | Characteristics | Bible versions |
|---|---|---|---|
| The Alexandrian text-type (also called the "Neutral Text" tradition; less frequently, the "Minority Text") | 2nd–4th centuries CE | This family constitutes a group of early and well-regarded texts, including Codex Vaticanus and Codex Sinaiticus. Most representatives of this tradition appear to come from around Alexandria, Egypt and from the Alexandrian Church. It contains readings that are often terse, shorter, somewhat rough, less harmonised, and generally more difficult. The family was once thought to result from a very carefully edited third-century recension, but now is believed to be merely the result of a carefully controlled and supervised process of copying and transmission. It underlies most translations of the New Testament produced since 1900. | NIV, NAB, NABRE, Douay, JB and NJB (albeit with some reliance on the Byzantine text-type), TNIV, NASB, RSV, ESV, EBR, NWT, LB, ASV, NC, GNB, CSB |
| The Western text-type | 3rd–9th centuries CE | Also a very early tradition, which comes from a wide geographical area stretching from North Africa to Italy and from Gaul to Syria. It occurs in Greek manuscripts and in the Latin translations used by the Western church. It is much less controlled than the Alexandrian family and its witnesses are seen to be more prone to paraphrase and other corruptions. It is sometimes called the Caesarean text-type. Some New Testament scholars would argue that the Caesarean constitutes a distinct text-type of its own. | Vetus Latina |
| The Byzantine text-type, also called the Koinē text-type (or "Majority Text") | 5th–16th centuries CE | This group comprises around 95% of all the manuscripts, the majority of which are comparatively very late in the tradition. It had become dominant at Constantinople from the fifth century on and was used throughout the Eastern Orthodox Church in the Byzantine Empire. It contains the most harmonistic readings, paraphrasing and significant additions, most of which are believed to be secondary readings. It underlies the Textus Receptus used for most Reformation-era translations of the New Testament. | Bible translations relying on the Textus Receptus, which is close to the Byzantine text: KJV, NKJV, Tyndale, Coverdale, Geneva, Bishops' Bible, OSB |
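One common way to sort witnesses into text-types is to measure how often each witness agrees with representative witnesses at a set of variant units. The Python below is a minimal sketch assuming that approach: a plain percentage-agreement score against one hypothetical representative per group, with each witness assigned to the group it agrees with most. Published quantitative methods use far more variant units and explicit agreement thresholds; the group profiles, the sigla W1 and W2, and the letter-coded readings are all invented for illustration.

```python
from typing import Optional

# One entry per variant unit; None marks a lacuna (the witness is not extant there).
Readings = list[Optional[str]]


def agreement(a: Readings, b: Readings) -> float:
    """Share of variant units, where both witnesses are extant, at which they agree."""
    pairs = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
    if not pairs:
        return 0.0
    return sum(x == y for x, y in pairs) / len(pairs)


def classify(witnesses: dict[str, Readings], groups: dict[str, Readings]) -> dict[str, str]:
    """Assign each witness to the group whose representative it agrees with most."""
    result = {}
    for siglum, readings in witnesses.items():
        scores = {name: agreement(readings, rep) for name, rep in groups.items()}
        result[siglum] = max(scores, key=scores.get)
    return result


# Hypothetical data: readings abbreviated as letters at five variant units.
groups = {
    "Alexandrian": ["a", "a", "a", "a", "a"],
    "Byzantine": ["b", "b", "b", "b", "b"],
}
witnesses = {
    "W1": ["a", "a", "b", "a", "a"],
    "W2": ["b", "b", None, "a", "b"],
}
print(classify(witnesses, groups))  # {'W1': 'Alexandrian', 'W2': 'Byzantine'}
```

A witness that scores close to evenly between groups is a sign of the mixed ancestry that makes stemmatics impractical for this tradition.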

Quran

Textual criticism of the Quran is a relatively young area of study, as Muslims have historically disapproved of higher criticism being applied to the Quran. In some countries, textual criticism can be seen as apostasy.

Muslims consider the original Arabic text to be the final revelation, revealed to Muhammad from AD 610 to his death in 632. In Islamic tradition, the Quran was memorised and written down by Muhammad's companions and copied as needed.

The Quran is believed to have been transmitted, at least for a time, through oral tradition. Differences that affected the meaning were noted, and around AD 650 Uthman began a process of standardization, presumably to rid the Quran of these differences. Uthman's standardization did not eliminate the textual variants.

In the 1970s, 14,000 fragments of the Quran, known as the Sana'a manuscripts, were discovered in the Great Mosque of Sana'a. About 12,000 fragments belonged to 926 copies of the Quran; the other 2,000 were loose fragments. The oldest known copy of the Quran so far belongs to this collection: it dates to the end of the seventh or to the eighth century.

The German scholar Gerd R. Puin has been investigating these Quran fragments for years. His research team made 35,000 microfilm photographs of the manuscripts, which he dated to the early part of the eighth century. Puin has not published the entirety of his work, but noted unconventional verse orderings, minor textual variations, and rare styles of orthography. He also suggested that some of the parchments were palimpsests which had been reused. Puin believed that this implied an evolving text as opposed to a fixed one.

In a 1999 article in The Atlantic Monthly, Gerd Puin is quoted as saying:

My idea is that the Koran is a kind of cocktail of texts that were not all understood even at the time of Muhammad. Many of them may even be a hundred years older than Islam itself. Even within the Islamic traditions there is a huge body of contradictory information, including a significant Christian substrate; one can derive a whole Islamic anti-history from them if one wants.

The Koran claims for itself that it is "mubeen", or "clear", but if you look at it, you will notice that every fifth sentence or so simply doesn't make sense. Many Muslims—and Orientalists—will tell you otherwise, of course, but the fact is that a fifth of the Koranic text is just incomprehensible. This is what has caused the traditional anxiety regarding translation. If the Koran is not comprehensible—if it can't even be understood in Arabic—then it's not translatable. People fear that. And since the Koran claims repeatedly to be clear but obviously is not—as even speakers of Arabic will tell you—there is a contradiction. Something else must be going on.

The Canadian Islamic scholar Andrew Rippin has likewise stated:

The impact of the Yemeni manuscripts is still to be felt. Their variant readings and verse orders are all very significant. Everybody agrees on that. These manuscripts say that the early history of the Koranic text is much more of an open question than many have suspected: the text was less stable, and therefore had less authority, than has always been claimed.

For these reasons, some scholars, especially those who are associated with the Revisionist school of Islamic studies, have proposed that the traditional account of the Quran's composition needs to be discarded and a new perspective on the Quran is needed. Puin, comparing Quranic studies with Biblical studies, has stated:

So many Muslims have this belief that everything between the two covers of the Koran is just God's unaltered word. They like to quote the textual work that shows that the Bible has a history and did not fall straight out of the sky, but until now the Koran has been out of this discussion. The only way to break through this wall is to prove that the Koran has a history too. The Sana'a fragments will help us to do this.

In 2015, some of the earliest known Quranic fragments, containing 62 of the Quran's 6,236 verses and radiocarbon-dated to between approximately AD 568 and 645, were identified at the University of Birmingham. David Thomas, Professor of Christianity and Islam, commented:

These portions must have been in a form that is very close to the form of the Koran read today, supporting the view that the text has undergone little or no alteration and that it can be dated to a point very close to the time it was believed to be revealed.

David Thomas pointed out that radiocarbon testing dates the death of the animal whose skin was used for the parchment, not the time when the text was written on it. Since blank parchment was often stored for years after being produced, he said the text could have been written as late as 650–655, during the codification of the Quran under Uthman.

Marijn van Putten, who has published work on the idiosyncratic orthography common to all early manuscripts of the Uthmanic text type, has argued, with examples, that because the Birmingham fragment (Mingana 1572a + Arabe 328c) shares a number of these idiosyncratic spellings, it is "clearly a descendant of the Uthmanic text type" and that it is "impossible" that it is a pre-Uthmanic copy, despite its early radiocarbon dating.

Talmud

Textual criticism of the Talmud has a long pre-history but has become a separate discipline from Talmudic study only recently. Much of the research is in Hebrew and German language periodicals.

Classical texts

Textual criticism originated in the classical era, and its development in modern times began with classics scholars, in an effort to determine the original content of texts like Plato's Republic. There are far fewer witnesses to classical texts than to the Bible, so scholars can use stemmatics and, in some cases, copy-text editing. However, unlike the New Testament, where the earliest witnesses are within 200 years of the original, the earliest existing manuscripts of most classical texts were written about a millennium after their composition. All things being equal, textual scholars expect that a larger time gap between an original and a manuscript means more changes in the text.
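Stemmatics groups witnesses by the errors they share: manuscripts that agree in the same error are presumed to descend from a common, possibly lost, exemplar. The Python below is a minimal sketch of only the first step of that reasoning. It assumes the editor has already decided which reading at each variant unit is an error (a hypothetical input) and simply counts shared errors between pairs of witnesses; it does not build a stemma, weigh significant against trivial errors, or detect contamination. The witnesses A to D and their Latin readings are invented.

```python
from itertools import combinations


def shared_errors(
    witnesses: dict[str, list[str]], errors: list[set[str]]
) -> dict[tuple[str, str], int]:
    """Count, for each pair of witnesses, the variant units where both have the same error."""
    counts = {}
    for a, b in combinations(sorted(witnesses), 2):
        counts[(a, b)] = sum(
            1
            for unit, bad in enumerate(errors)
            if witnesses[a][unit] == witnesses[b][unit] and witnesses[a][unit] in bad
        )
    return counts


# Hypothetical witnesses at four variant units, plus the readings the editor
# has already judged to be errors at each unit.
witnesses = {
    "A": ["lux", "dixit", "magna", "erat"],
    "B": ["lux", "dicit", "magno", "erat"],
    "C": ["lex", "dicit", "magno", "erat"],
    "D": ["lex", "dixit", "magna", "era"],
}
errors = [{"lex"}, {"dicit"}, {"magno"}, {"era"}]

for pair, n in sorted(shared_errors(witnesses, errors).items(), key=lambda kv: -kv[1]):
    if n:
        print(pair, n)
# ('B', 'C') 2
# ('C', 'D') 1
```

Here B and C share two errors, suggesting a common exemplar, while C's single agreement in error with D might be coincidence or contamination: the kind of judgment the method leaves to the editor.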

Legal protection

Scientific and critical editions can be protected by copyright as works of authorship if they show sufficient creativity or originality. The mere addition of a word, or the substitution of one term with another believed to be more correct, usually does not reach that level of originality. The notes explaining the analysis, and why and how such changes were made, constitute a separate work that may be copyrightable in its own right if the other requirements are met. In the European Union, critical and scientific editions may also be protected by the relevant neighboring right for critical and scientific publications of public-domain works, as permitted by Article 5 of the Copyright Term Directive. Not all EU member states have transposed Article 5 into national law.

Digital textual scholarship

Digital textual criticism is a relatively new branch of textual criticism working with digital tools to establish a critical edition. The development of digital editing tools has allowed editors to transcribe, archive and process documents much faster than before. Some scholars claim digital editing has radically changed the nature of textual criticism; but others believe the editing process has remained fundamentally the same, and digital tools have simply made aspects of it more efficient.

History

From its beginnings, digital scholarly editing involved developing a system for displaying both a newly "typeset" text and a history of variations in the text under review. Until about the mid-2000s, digital archives relied almost entirely on manual transcriptions of texts. Notable exceptions are the earliest digital scholarly editions, published in Budapest in the 1990s. These editions contained high-resolution images next to the diplomatic transcription of the texts, as well as a newly typeset text with annotations. These old websites are still available at their original location. Over the course of the early twenty-first century, producing image files became much faster and cheaper, and storage space and upload times ceased to be significant issues. The next step in digital scholarly editing was the wholesale introduction of images of historical texts, particularly high-definition images of manuscripts, which had formerly been offered only in samples.

Methods

Because historical texts had to be represented primarily through transcription, and because every feature of a text that could not be recorded with a single keystroke on a QWERTY keyboard had to be encoded, encoding schemes were invented. The Text Encoding Initiative (TEI) uses encoding for the same purpose, but its particulars were designed for scholarly use, in the hope that scholarly work on digital texts would migrate successfully from aging operating systems and digital platforms to new ones, and that standardization would make it easy to exchange data among different projects.
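To make the idea concrete, the TEI Guidelines' critical-apparatus module records variation with an `<app>` (apparatus entry) element containing a `<lem>` (the lemma, or adopted reading) and one or more `<rdg>` (variant reading) elements, each with a `wit` attribute naming the witnesses. The Python below is a minimal sketch that parses a made-up fragment in this "parallel segmentation" style using the standard library's `xml.etree.ElementTree` and reconstructs each witness's text. The sigla A, B and C and the sentence are hypothetical, and the sketch ignores most of what real TEI files contain (headers, witness lists, nested apparatus).

```python
import xml.etree.ElementTree as ET

TEI_NS = "http://www.tei-c.org/ns/1.0"

# Hypothetical fragment: the base text runs through <p>, and each <app>
# holds the competing readings at one point of variation.
SAMPLE = f"""<p xmlns="{TEI_NS}">The <app>
  <lem wit="#A">quick</lem>
  <rdg wit="#B #C">swift</rdg>
</app> fox <app>
  <lem wit="#A #B">jumped</lem>
  <rdg wit="#C">leapt</rdg>
</app>.</p>"""


def witness_text(fragment: str, siglum: str) -> str:
    """Rebuild the text of one witness from a TEI parallel-segmentation fragment."""
    root = ET.fromstring(fragment)
    parts = [root.text or ""]
    for app in root.findall(f"{{{TEI_NS}}}app"):
        for reading in app:  # the <lem> and <rdg> children, in document order
            # wit is a space-separated list of pointers such as "#B #C".
            sigla = {w.lstrip("#") for w in reading.get("wit", "").split()}
            if siglum in sigla:
                parts.append(reading.text or "")
                break
        parts.append(app.tail or "")  # shared text following this entry
    return "".join(parts)


for s in "ABC":
    print(s, witness_text(SAMPLE, s))
```

Because every witness's text can be regenerated from one file, the same encoding can drive a reading text, an apparatus, or a collation.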

Software

Several computer programs and standards exist to support the work of the editors of critical editions. These include:

- The Text Encoding Initiative. The Guidelines of the TEI provide much detailed analysis of the procedures of critical editing, including recommendations about how to mark up a computer file containing a text with critical apparatus. See especially the following chapters of the Guidelines: 10. Manuscript Description, 11. Representation of Primary Sources, and 12. Critical Apparatus.

- Juxta is an open-source tool for comparing and collating multiple witnesses to a single textual work. It was designed to help scholars and editors examine the history of a text from manuscript to print versions. Juxta provides collation for multiple versions of texts that are marked up in plain text or TEI/XML format.

- The EDMAC macro package for Plain TeX is a set of macros originally developed by John Lavagnino and Dominik Wujastyk for typesetting critical editions. "EDMAC" stands for "EDition" "MACros." EDMAC is in maintenance mode.

- The ledmac package is a development of EDMAC by Peter R. Wilson for typesetting critical editions with LaTeX. ledmac is in maintenance mode.

- The eledmac package is a further development of ledmac by Maïeul Rouquette that adds more sophisticated features and solves more advanced problems. eledmac was forked from ledmac when it became clear that it needed to develop in ways that would compromise backward-compatibility. eledmac is maintenance mode.

- The reledmac package is a further development of eledmac by Maïeul Rouquette that rewrittes many part of the code in order to allow more robust developments in the future. In 2015, it is in active development.

- ednotes, written by Christian Tapp and Uwe Lück, is another package for typesetting critical editions using LaTeX.

- Classical Text Editor (CTE) is a word processor for critical editions, commentaries and parallel texts written by Stefan Hagel. CTE is designed for the Windows operating system, but has been run successfully on Linux and OS X using Wine. CTE can export files in TEI format. As of 2014, CTE was in active development.

- Critical Edition Typesetter by Bernt Karasch is a system for typesetting critical editions starting from input into a word-processor, and ending up with typesetting with TeX and EDMAC. Development of CET seems to have stopped in 2004.
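Collation tools such as Juxta align witnesses and report where they diverge, and the TEI Guidelines describe how to record those divergences as a critical apparatus. The following is a minimal Python sketch of that workflow under stated assumptions; it is not how Juxta or any of the packages above actually work. It aligns two witnesses word by word with the standard library's `difflib.SequenceMatcher` and writes each divergence inline as a TEI `<app>` element (the parallel-segmentation style), with the base witness's reading in `<lem>` and the other witness's reading in `<rdg>`. The function names `collate` and `to_tei` and the sigla `#A` and `#B` are hypothetical, the output is a bare `<p>` fragment rather than a complete TEI document, and splitting on whitespace is a simplifying assumption that ignores punctuation and spelling normalization.

```python
import difflib
from xml.sax.saxutils import escape, quoteattr


def collate(base: str, witness: str) -> list[tuple[str, list[str], list[str]]]:
    """Align two witnesses word by word (pairwise only).

    Assumption: a "word" is any whitespace-separated token; no
    normalization of spelling, case or punctuation is attempted.
    Returns (opcode, base_words, witness_words) triples, where opcode is
    one of difflib's 'equal', 'replace', 'delete' or 'insert'.
    """
    a, b = base.split(), witness.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    return [(op, a[i1:i2], b[j1:j2])
            for op, i1, i2, j1, j2 in matcher.get_opcodes()]


def to_tei(base: str, witness: str,
           base_sigil: str = "#A", wit_sigil: str = "#B") -> str:
    """Render a pairwise collation as inline TEI <app> entries.

    The sigla are hypothetical pointers to <witness xml:id="..."> elements
    that a full TEI document would declare; this returns only a <p> fragment.
    """
    parts = []
    for op, base_words, wit_words in collate(base, witness):
        if op == "equal":
            # Shared text is emitted as plain (escaped) content.
            parts.append(escape(" ".join(base_words)))
        else:
            # Any divergence becomes one apparatus entry; an empty <rdg>
            # marks an omission, an empty <lem> an addition in the witness.
            parts.append(
                "<app>"
                f"<lem wit={quoteattr(base_sigil)}>{escape(' '.join(base_words))}</lem>"
                f"<rdg wit={quoteattr(wit_sigil)}>{escape(' '.join(wit_words))}</rdg>"
                "</app>"
            )
    return "<p>" + " ".join(parts) + "</p>"


if __name__ == "__main__":
    print(to_tei("In the beginning was the word",
                 "In the beginning was the Word"))
```

Run as a script, this prints `<p>In the beginning was the <app><lem wit="#A">word</lem><rdg wit="#B">Word</rdg></app></p>`. A pairwise diff is the simplest possible aligner; collating many witnesses at once, or detecting transpositions, requires more than this sketch provides.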

Critical editions of religious texts (selection)

- Book of Mormon
  - Book of Mormon Critical Text – FARMS 2nd edition
- Hebrew Bible and Old Testament
  - Complutensian Polyglot (based on now-lost manuscripts)
  - Septuaginta – Rahlfs' 2nd edition
  - Göttingen Septuagint (Vetus Testamentum Graecum: Auctoritate Academiae Scientiarum Gottingensis editum): in progress
  - Biblia Hebraica Stuttgartensia – 4th edition
  - Hebrew Bible: A Critical Edition – an ongoing project designed to differ from Biblia Hebraica by producing an eclectic text
- New Testament
  - Editio octava critica maior – Tischendorf edition
  - The Greek New Testament According to the Majority Text – Hodges & Farstad edition
  - The New Testament in the Original Greek – Westcott & Hort edition
  - Novum Testamentum Graece Nestle-Aland 28th edition (NA28)
  - United Bible Societies' Greek New Testament UBS 4th edition (UBS4)
  - Novum Testamentum Graece et Latine – Merk edition
  - Editio Critica Maior – German Bible Society edition
- Critical translations
  - The Comprehensive New Testament – standardized Nestle-Aland 27 edition
  - The Dead Sea Scrolls Bible – with textual mapping to Masoretic, Dead Sea Scrolls, and Septuagint variants
  - New English Translation of the Septuagint, a critical translation from the completed parts of the Göttingen Septuagint, with the remainder from Rahlfs' manual edition

See also

General

- Authority (textual criticism)

- Close reading

- Diplomatics

- Hermeneutics

- Kaozheng (Chinese textual criticism)

- List of manuscripts

- Palaeography

- Source criticism

Bible

- An Historical Account of Two Notable Corruptions of Scripture

- Bible version debate

- Biblical gloss

- Biblical manuscript

- Categories of New Testament manuscripts

- John 21

- List of Biblical commentaries

- List of major textual variants in the New Testament

- List of New Testament papyri

- List of New Testament uncials

- List of New Testament verses not included in modern English translations

- Mark 16

- Modern English Bible translations

- Jesus and the woman taken in adultery (Pericope Adulteræ)

- Wiseman hypothesis, dating the book of Genesis

Bibliography

- Aland, Kurt; Aland, Barbara (1987). The Text of the New Testament. Brill. ISBN 90-04-08367-7.

- Aland, Barbara (1994). New Testament Textual Criticism, Exegesis and Church History. Peeters Publishers. ISBN 90-390-0105-7.

- Bentham, George; Gosse, Edmund (1902). The Variorum and Definitive Edition of the Poetical and Prose Writings of Edward Fitzgerald. Doubleday, Page and Co.

- Bowers, Fredson (1964). "Some Principles for Scholarly Editions of Nineteenth-Century American Authors". Studies in Bibliography. 17: 223–228. Retrieved 2006-06-04.

- Bowers, Fredson (1972). "Multiple Authority: New Problems and Concepts of Copy-Text". Library. Fifth Series. XXVII (2): 81–115. doi:10.1093/library/s5-XXVII.2.81.

- Bradley, Sculley (1980). Leaves of Grass: A Textual Variorum of the Printed Poems. NYU Press. ISBN 0-8147-9444-0.

- Comfort, Philip Wesley (2005). Encountering the Manuscripts: An Introduction to New Testament Paleography & Textual Criticism. B&H Publishing Group. ISBN 0-8054-3145-4.

- Davis, Tom (1977). "The CEAA and Modern Textual Editing". Library. Fifth Series. XXXII (1): 61–74. doi:10.1093/library/s5-XXXII.1.61.

- Ehrman, Bart D. (2005). Misquoting Jesus: The Story Behind Who Changed the Bible and Why. Harper Collins. ISBN 978-0-06-073817-4.

- Ehrman, Bart D. (2006). Whose Word Is It? Continuum International Publishing Group. ISBN 0-8264-9129-4.

- Gaskell, Philip (1978). From Writer to Reader: Studies in Editorial Method. Oxford: Oxford University Press. ISBN 0-19-818171-X.

- Greetham, D. C. (1999). Theories of the Text. Oxford: Oxford University Press. ISBN 0-19-811993-3.

- Greg, W. W. (1950). "The Rationale of Copy-Text". Studies in Bibliography. 3: 19–36. Retrieved 2006-06-04.

- Habib, Rafey (2005). A history of literary criticism: from Plato to the present. Cambridge, MA: Blackwell Pub. ISBN 0-631-23200-1.

- Hartin, Patrick J.; Petzer, J. H.; Manning, Bruce (1991). Text and Interpretation: New Approaches in the Criticism of the New Testament. Brill. ISBN 90-04-09401-6.

- Jarvis, Simon (1995). Scholars and Gentlemen: Shakespearian Textual Criticism and Representations of Scholarly Labour, 1725–1765. Oxford University Press. ISBN 0-19-818295-3.

- Klijn, Albertus Frederik Johannes (1980). An Introduction to the New Testament. Brill. p. 14. ISBN 90-04-06263-7.

- Maas, Paul (1958). Textual Criticism. Oxford University Press. ISBN 0-19-814318-4.

- McCarter, Peter Kyle Jr (1986). Textual criticism: recovering the text of the Hebrew Bible. Philadelphia, PA: Fortress Press. ISBN 0-8006-0471-7.

- McGann, Jerome J. (1992). A critique of modern textual criticism. Charlottesville: University Press of Virginia. ISBN 0-8139-1418-3.

- McKerrow, R. B. (1939). Prolegomena for the Oxford Shakespeare. Oxford: Clarendon Press.

- Montgomery, William Rhadamanthus; Wells, Stanley W.; Taylor, Gary; Jowett, John (1997). William Shakespeare: A Textual Companion. New York: W. W. Norton & Company. ISBN 0-393-31667-X.

- Parker, D.C. (2008). An Introduction to the New Testament Manuscripts and Their Texts. Cambridge: Cambridge University Press. ISBN 978-0-521-71989-6.

- van Reenen, Pieter; van Mulken, Margot, eds. (1996). Studies in Stemmatology. Amsterdam: John Benjamins Publishing Company. ISBN 90-272-2153-7.

- Rosemann, Philipp (1999). Understanding scholastic thought with Foucault. New York: St. Martin's Press. p. 73. ISBN 0-312-21713-7.

- Schuh, Randall T. (2000). Biological systematics: principles and applications. Ithaca, N.Y: Cornell University Press. ISBN 0-8014-3675-3.

- Shillingsburg, Peter (1989). "An Inquiry into the Social Status of Texts and Modes of Textual Criticism". Studies in Bibliography. 42: 55–78. Archived from the original on 2013-09-12. Retrieved 2006-06-07.

- Tanselle, G. Thomas (1972). "Some Principles for Editorial Apparatus". Studies in Bibliography. 25: 41–88. Retrieved 2006-06-04.

- Tanselle, G. Thomas (1975). "Greg's Theory of Copy-Text and the Editing of American Literature". Studies in Bibliography. 28: 167–230. Retrieved 2006-06-04.

- Tanselle, G. Thomas (1976). "The Editorial Problem of Final Authorial Intention". Studies in Bibliography. 29: 167–211. Retrieved 2006-06-04.

- Tanselle, G. Thomas (1981). "Recent Editorial Discussion and the Central Questions of Editing". Studies in Bibliography. 34: 23–65. Retrieved 2007-09-07.

- Tanselle, G. Thomas (1986). "Historicism and Critical Editing". Studies in Bibliography. 39: 1–46. Retrieved 2006-06-04.

- Tanselle, G. Thomas (1995). "The Varieties of Scholarly Editing". In D. C. Greetham (ed.). Scholarly Editing: A Guide to Research. New York: The Modern Language Association of America.

- Tenney, Merrill C. (1985). Dunnett, Walter M. (ed.). New Testament survey. Grand Rapids, MI: W.B. Eerdmans Pub. Co. ISBN 0-8028-3611-9.

- Tov, Emanuel (2001). Textual criticism of the Hebrew Bible. Minneapolis: Fortress. ISBN 90-232-3715-3.

- Vincent, Marvin Richardson (1899). A History of the Textual Criticism of the New Testament. Macmillan. ISBN 0-8370-5641-1.

- Wegner, Paul (2006). A Student's Guide to Textual Criticism of the Bible. InterVarsity Press. ISBN 0-8308-2731-5.

- Wilson, N. G.; Reynolds, L. D. (1974). Scribes and Scholars: A Guide to the Transmission of Greek and Latin Literature. Oxford: Clarendon Press. p. 186. ISBN 0-19-814371-0.

- Zeller, Hans (1975). "A New Approach to the Critical Constitution of Literary Texts". Studies in Bibliography. 28: 231–264. Archived from the original on 2013-09-12. Retrieved 2006-06-07.

Further reading

- Epp, Eldon J. (1976). "The Eclectic Method in New Testament Textual Criticism: Solution or Symptom?". The Harvard Theological Review. 69 (3/4): 211–257.

- Housman, A. E. (1922). "The Application of Thought to Textual Criticism". Proceedings of the Classical Association. 18: 67–84. Retrieved 2008-03-08.

- Love, Harold (1993). "section III". Scribal Publication in Seventeenth-Century England. Oxford: Clarendon Press. ISBN 0-19-811219-X.

- Soulen, Richard N.; Soulen, R. Kendall (2001). Handbook of Biblical Criticism (3rd ed.). Westminster John Knox Press. ISBN 0-664-22314-1.

External links

General

- An example of cladistics applied to textual criticism

- Stemma and Stemmatics

- Stemmatics and Information Theory

- Computer-assisted stemmatology challenge & benchmark data-sets

- Searching for the Better Text: How errors crept into the Bible and what can be done to correct them, Biblical Archaeology Review

- The European Society for Textual Scholarship.

- Society for Textual Scholarship.

- Walter Burley, Commentarium in Aristotelis De Anima L.III, critical edition by Mario Tonelotto: an example of a critical edition based on four manuscripts (transcribed from medieval palaeography).

Bible

- Manuscript Comparator: allows two or more New Testament manuscript editions to be compared in side-by-side and unified views (similar to diff output)

- A detailed discussion of the textual variants in the Gospels (covering about 1200 variants on 2000 pages)

- A complete list of all New Testament Papyri with link to images

- An Electronic Edition of The Gospel According to John in the Byzantine Tradition

- New Testament Manuscripts (listing of the manuscript evidence for more than 11,000 variants in the New Testament)

- Library of latest modern books of biblical studies and biblical criticism

- An Online Textual Commentary of the Greek New Testament - transcription of more than 60 ancient manuscripts of the New Testament with a textual commentary and an exhaustive critical apparatus.

- Herbermann, Charles, ed. (1913). "Lower Criticism". Catholic Encyclopedia. New York: Robert Appleton Company.
