Voltmeter

A voltmeter is an instrument used for measuring the electric potential difference between two points in an electric circuit. It is connected in parallel with the points being measured. It usually has a high resistance so that it draws negligible current from the circuit.

Analog voltmeters move a pointer across a scale in proportion to the voltage measured and can be built from a galvanometer and series resistor. Meters using amplifiers can measure tiny voltages of microvolts or less. Digital voltmeters give a numerical display of voltage by use of an analog-to-digital converter.

Voltmeters are made in a wide range of styles, some separately powered (e.g. by battery), and others powered by the measured voltage source itself. Instruments permanently mounted in a panel are used to monitor generators or other fixed apparatus. Portable instruments, usually equipped to also measure current and resistance in the form of a multimeter, are standard test instruments used in electrical and electronics work. Any measurement that can be converted to a voltage can be displayed on a meter that is suitably calibrated; for example, pressure, temperature, flow or level in a chemical process plant.

General-purpose analog voltmeters may have an accuracy of a few percent of full scale and are used with voltages from a fraction of a volt to several thousand volts. Digital meters can be made with high accuracy, typically better than 1%. Specially calibrated test instruments have higher accuracies, with laboratory instruments capable of measuring to accuracies of a few parts per million. Part of the problem of making an accurate voltmeter is that of calibration to check its accuracy. In laboratories, the Weston cell is used as a voltage standard for precision work. Precision voltage references based on electronic circuits are also available.
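Because analog accuracy is quoted as a percentage of full scale, the absolute error is the same anywhere on the scale, so the error relative to the reading grows as the reading falls. A minimal Python sketch; the 2% accuracy and 10 V range are illustrative assumptions, not figures from any particular meter.

```python
def full_scale_error(accuracy_pct: float, full_scale: float) -> float:
    """Worst-case absolute error (volts) for an accuracy quoted as % of full scale."""
    return accuracy_pct / 100.0 * full_scale


def relative_error_pct(accuracy_pct: float, full_scale: float, reading: float) -> float:
    """The same absolute error expressed as a percentage of the actual reading."""
    return 100.0 * full_scale_error(accuracy_pct, full_scale) / reading


# Hypothetical meter: 2% of full scale on a 10 V range.
for reading in (10.0, 5.0, 1.0):
    print(f"{reading:4.1f} V reading: "
          f"±{full_scale_error(2, 10):.2f} V "
          f"= ±{relative_error_pct(2, 10, reading):.0f}% of reading")
```

With these numbers the error is ±0.2 V throughout, which is 2% of a full-scale reading but 20% of a 1 V reading; this is why readings are usually taken in the upper part of a range.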

Schematic symbol

In circuit diagrams, a voltmeter is represented by the letter V in a circle, with two emerging lines representing the two points of measurement.

Analog voltmeter

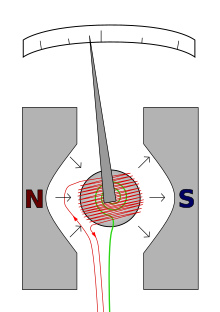

- The red wire carries the current to be measured.

- The restoring spring is shown in green.

- N and S are the north and south poles of the magnet.

A moving-coil galvanometer can be used as a voltmeter by inserting a resistor in series with the instrument. The galvanometer has a coil of fine wire suspended in a strong magnetic field. When a current flows through the coil, the interaction between the magnetic field of the coil and that of the stationary magnet creates a torque that tends to rotate the coil. The torque is proportional to the current through the coil. The coil rotates, compressing a spring that opposes the rotation. The deflection of the coil is thus proportional to the current, which in turn is proportional to the applied voltage; a pointer attached to the coil indicates this deflection on a scale.
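The series resistor (often called a multiplier) is chosen so that the full-scale voltage drives exactly the movement's full-scale current: the total resistance is V_fs / I_fs, and the series resistor supplies whatever the coil does not. A minimal Python sketch, assuming the coil resistance is known; the 1 mA, 100 Ω movement is a hypothetical example.

```python
def series_resistor(full_scale_volts: float, movement_current: float,
                    coil_resistance: float) -> float:
    """Multiplier resistance that gives full-scale deflection at full_scale_volts."""
    r_total = full_scale_volts / movement_current  # total resistance needed
    if r_total < coil_resistance:
        raise ValueError("range too low for this movement")
    return r_total - coil_resistance


def pointer_fraction(applied_volts: float, r_series: float,
                     movement_current: float, coil_resistance: float) -> float:
    """Fraction of full-scale deflection; deflection is proportional to coil current."""
    current = applied_volts / (r_series + coil_resistance)
    return current / movement_current


# Hypothetical movement: 1 mA full scale, 100 ohm coil, converted to a 10 V meter.
r = series_resistor(10.0, 1e-3, 100.0)
print(f"series resistor: {r:.0f} ohms")
print(f"2.5 V gives {pointer_fraction(2.5, r, 1e-3, 100.0):.2f} of full scale")
```

Here 10 V / 1 mA = 10,000 Ω total, so the multiplier is 9,900 Ω, and a quarter of the full-scale voltage gives a quarter of full-scale deflection, reflecting the linear scale described above.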

One of the design objectives of the instrument is to disturb the circuit as little as possible, so the instrument should draw a minimum of current to operate. This is achieved by using a sensitive galvanometer in series with a high resistance; the entire instrument is then connected in parallel with the circuit being examined.
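Because the meter is connected in parallel, its resistance appears across the part of the circuit being measured and lowers the voltage there (the "loading" effect). A minimal Python sketch for a two-resistor divider; the 10 V source, the 100 kΩ resistors, and the meter resistances of 200 kΩ and 10 MΩ are hypothetical values chosen for illustration.

```python
from typing import Optional


def parallel(r_a: float, r_b: float) -> float:
    """Equivalent resistance of two resistors in parallel."""
    return r_a * r_b / (r_a + r_b)


def divider_reading(v_source: float, r_top: float, r_bottom: float,
                    r_meter: Optional[float] = None) -> float:
    """Voltage across r_bottom of a divider, optionally with a meter across r_bottom."""
    r_low = r_bottom if r_meter is None else parallel(r_bottom, r_meter)
    return v_source * r_low / (r_top + r_low)


print(f"unloaded: {divider_reading(10.0, 100e3, 100e3):.3f} V")
for r_meter in (200e3, 10e6):
    reading = divider_reading(10.0, 100e3, 100e3, r_meter)
    print(f"meter of {r_meter / 1e3:.0f} kOhm reads {reading:.3f} V")
```

The true voltage is 5 V; the 200 kΩ meter pulls it down to about 4.0 V, while the 10 MΩ meter reads about 4.98 V. The higher the meter resistance relative to the circuit resistance, the smaller the error.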

The sensitivity of such a meter can be expressed as "ohms per volt": the resistance of the meter circuit divided by the full-scale voltage. For example, a meter with a sensitivity of 1000 ohms per volt draws 1 milliampere at full scale on any range; if the full scale is 200 volts, the resistance at the instrument's terminals is 200,000 ohms. For multi-range instruments, the input resistance therefore varies as the instrument is switched to different ranges.
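The ohms-per-volt arithmetic in the example can be written out directly: input resistance is sensitivity times full-scale voltage, and full-scale current is the reciprocal of the sensitivity. A minimal Python sketch using the figures from the text (1000 Ω/V, 200 V range).

```python
def input_resistance(ohms_per_volt: float, full_scale_volts: float) -> float:
    """Resistance at the meter terminals on a given range."""
    return ohms_per_volt * full_scale_volts


def full_scale_current(ohms_per_volt: float) -> float:
    """Current drawn at full scale; the same on every range of the meter."""
    return 1.0 / ohms_per_volt


r = input_resistance(1000, 200)
i = full_scale_current(1000)
print(f"{r:.0f} ohms, {i * 1e3:.1f} mA")  # prints "200000 ohms, 1.0 mA"
```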

Moving-coil instruments with a permanent-magnet field respond only to direct current. Measurement of AC voltage requires a rectifier in the circuit so that the coil deflects in only one direction. Some moving-coil instruments are also made with the zero position in the middle of the scale instead of at one end; these are useful if the voltage reverses its polarity.

Voltmeters operating on the electrostatic principle use the mutual repulsion between two charged plates to deflect a pointer attached to a spring. Meters of this type draw negligible current but respond only to voltages above about 100 volts, and they work with either alternating or direct current.

Amplified voltmeter

The sensitivity and input resistance of a voltmeter can be increased if the current required to deflect the meter pointer is supplied by an amplifier and power supply instead of by the circuit under test. The electronic amplifier between input and meter gives two benefits: a rugged moving-coil instrument can be used, since its sensitivity need not be high, and the input resistance can be made high, reducing the current drawn from the circuit under test. Amplified voltmeters often have an input resistance of 1, 10, or 20 megohms, independent of the range selected. A once-popular form of this instrument used vacuum tubes in the amplifier circuit and so was called the vacuum tube voltmeter (VTVM). These were almost always powered from the AC mains and so were not particularly portable. Today these circuits use solid-state amplifiers built with field-effect transistors, hence the name FET-VM, and appear in handheld digital multimeters as well as in bench and laboratory instruments. They have largely replaced non-amplified multimeters except in the least expensive price ranges.
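The difference between a passive meter, whose input resistance scales with the range, and an amplified meter, whose input resistance is fixed, can be tabulated. A minimal Python sketch; the range settings, the 20,000 Ω/V passive sensitivity, and the 10 MΩ amplified input resistance are illustrative assumptions.

```python
RANGES_V = (2.5, 10.0, 50.0, 250.0)  # hypothetical range-switch positions (volts full scale)
PASSIVE_OHMS_PER_VOLT = 20_000       # assumed sensitivity of a passive moving-coil meter
AMPLIFIED_INPUT_OHMS = 10e6          # assumed fixed input resistance of an amplified meter


def input_resistance_table(ranges=RANGES_V):
    """Return (range, passive input resistance, amplified input resistance) rows."""
    return [(v_fs, PASSIVE_OHMS_PER_VOLT * v_fs, AMPLIFIED_INPUT_OHMS)
            for v_fs in ranges]


for v_fs, passive, amplified in input_resistance_table():
    print(f"{v_fs:>6.1f} V range: passive {passive / 1e6:5.2f} MOhm, "
          f"amplified {amplified / 1e6:.0f} MOhm")
```

Under these assumptions the passive meter presents only 50 kΩ on its 2.5 V range, while the amplified meter presents 10 MΩ on every range, so the advantage is greatest when measuring low voltages in high-resistance circuits.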

Most VTVMs and FET-VMs handle DC voltage, AC voltage, and resistance measurements; modern FET-VMs add current measurements and often other functions as well. A specialized form of the VTVM or FET-VM is the AC voltmeter. These instruments are optimized for measuring AC voltage. They have much wider bandwidth and better sensitivity than a typical multifunction device.

Digital voltmeter

A digital voltmeter (DVM) measures an unknown input voltage by converting it to a digital value and then displaying the voltage in numeric form. DVMs are usually designed around a special type of analog-to-digital converter called an integrating converter.
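The text does not say which integrating architecture is meant; the dual-slope converter is one common type and is assumed here. The input is integrated for a fixed N clock ticks, then a reference of opposite polarity is integrated until the integrator returns to zero, taking M ticks, so V_in = V_ref × M / N. A minimal, idealized Python sketch (perfect integrator, no comparator offset, time measured in clock ticks); the function names and the 2 V reference are hypothetical.

```python
import math
from typing import Callable


def dual_slope_count(v_in: Callable[[int], float], v_ref: float,
                     n_fixed: int = 1000, max_counts: int = 100_000) -> int:
    """Idealized dual-slope conversion; returns the run-down count M.

    v_in(tick) gives the input voltage at each clock tick, so the sketch can
    show that the result depends on the average input over the run-up phase.
    """
    if v_ref <= 0:
        raise ValueError("this sketch assumes a positive reference")
    # Run-up: integrate the unknown input for a fixed number of clock ticks.
    integrator = 0.0
    for tick in range(n_fixed):
        integrator += v_in(tick)
    if integrator < 0:
        raise ValueError("this sketch handles only inputs with a positive average")
    # Run-down: integrate the reference with opposite polarity, counting
    # clock ticks until the integrator output returns to zero.
    count = 0
    while integrator > 0 and count < max_counts:
        integrator -= v_ref
        count += 1
    return count


def count_to_volts(count: int, v_ref: float, n_fixed: int = 1000) -> float:
    """V_in * N = V_ref * M, so V_in = V_ref * M / N (resolution V_ref / N)."""
    return v_ref * count / n_fixed


steady = dual_slope_count(lambda tick: 1.234, v_ref=2.0)
# 1 V input plus an interfering sine wave that completes exactly 10 cycles
# during the run-up phase (period of 100 ticks).
noisy = dual_slope_count(
    lambda tick: 1.0 + 0.5 * math.sin(2 * math.pi * tick / 100), v_ref=2.0)
print(count_to_volts(steady, 2.0), count_to_volts(noisy, 2.0))
```

Both readings land within one count (2 mV here) of 1.234 V and 1.0 V. In a real converter both phases share the same integrator and clock, so the result depends on the reference and the count ratio rather than on the integrator's RC constant or the clock frequency, and interference that completes whole cycles during the run-up integrates to zero.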

DVM measurement accuracy is affected by many factors, including temperature, input impedance, and DVM power supply voltage variations. Less expensive DVMs often have input resistance on the order of 10 MΩ. Precision DVMs can have input resistances of 1 GΩ or higher for the lower voltage ranges (e.g. less than 20 V). To ensure that a DVM's accuracy is within the manufacturer's specified tolerances, it must be periodically calibrated against a voltage standard such as the Weston cell.

The first digital voltmeter was invented and produced by Andrew Kay of Non-Linear Systems (and later founder of Kaypro) in 1954.

Simple AC voltmeters use a rectifier connected to a DC measurement circuit, which responds to the average value of the waveform. The meter can be calibrated to display the root mean square (RMS) value of the waveform, assuming a fixed relation between the average value of the rectified waveform and the RMS value. If the waveform departs significantly from the sine wave assumed in the calibration, the meter will be inaccurate, though for simple wave shapes the reading can be corrected by multiplying by a constant factor. Early "true RMS" circuits used a thermal converter that responded only to the RMS value of the waveform. Modern instruments compute the RMS value electronically by squaring the input, averaging the result, and taking the square root. This allows accurate RMS measurements for a variety of waveforms.
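The difference between an average-responding meter and a true-RMS measurement can be shown numerically. A minimal Python sketch, assuming full-wave rectification and calibration for a sine wave, where RMS / rectified average = π / (2√2) ≈ 1.111; the sampled waveforms are illustrative.

```python
import math

# RMS divided by rectified average for a sine wave, about 1.111.
SINE_FORM_FACTOR = math.pi / (2 * math.sqrt(2))


def true_rms(samples):
    """Square, average, then take the square root."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def average_responding_reading(samples):
    """Full-wave rectified average, scaled as if the input were a sine wave."""
    rectified_avg = sum(abs(x) for x in samples) / len(samples)
    return SINE_FORM_FACTOR * rectified_avg


N = 1000  # samples per period
sine = [math.sin(2 * math.pi * k / N) for k in range(N)]
square = [1.0 if k < N // 2 else -1.0 for k in range(N)]

for name, wave in (("sine", sine), ("square", square)):
    print(f"{name:6}: true RMS {true_rms(wave):.3f}, "
          f"average-responding {average_responding_reading(wave):.3f}")
```

For the sine wave both methods agree (about 0.707 for a 1 V peak). For the 1 V square wave the true RMS is 1 V, but the average-responding meter reads about 1.111 V, roughly 11% high; dividing by the constant 1.111 corrects it, as the text notes for simple wave shapes.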

See also

- Ammeter

- Class of accuracy in electrical measurements

- Electrical measurements

- Electrometer

- Electronic test equipment

- Metrology

- Multimeter

- Ohmmeter

- Potentiometer (measuring instrument)

- Solenoid voltmeter

- Voltage divider

- Measurement category

External links

- DC Metering Circuits chapter from Lessons In Electric Circuits Vol 1 DC free ebook and Lessons In Electric Circuits series.
