Culture in music cognition

Culture in music cognition refers to the impact that a person's culture has on their music cognition, including their preferences, emotion recognition, and musical memory. Musical preferences are biased toward culturally familiar musical traditions beginning in infancy, and adults' classification of the emotion of a musical piece depends on both culturally specific and universal structural features. Additionally, individuals' musical memory abilities are greater for culturally familiar music than for culturally unfamiliar music. The sum of these effects makes culture a powerful influence in music cognition.

Effect of culture

Culturally bound preferences for and familiarity with music begin in infancy and continue through adolescence and adulthood. People tend to prefer and remember music from their own cultural tradition.

Familiarity with culturally regular meter styles is already in place in infants only a few months old. The looking times of 4- to 8-month-old Western infants indicate that they prefer Western meter in music, while Turkish infants of the same age prefer both Turkish and Western meters (Western meters not being completely unfamiliar in Turkish culture). Both groups preferred either meter when compared with arbitrary meter.

In addition to influencing preference for meter, culture affects people's ability to correctly identify music styles. Adolescents from Singapore and the UK rated familiarity and preference for excerpts of Chinese, Malay, and Indian music styles. Neither group demonstrated a preference for the Indian music samples, although the Singaporean teenagers recognized them. Participants from Singapore showed higher preference for and ability to recognize the Chinese and Malay samples; UK participants showed little preference or recognition for any of the music samples, as those types of music are not present in their native culture.

Effect of musical experience

An individual's musical experience may affect how they formulate preferences for music from their own culture and other cultures. American and Japanese individuals (non-music majors) both indicated preference for Western music, but Japanese individuals were more receptive to Eastern music. Among the participants, there was one group with little musical experience and one group that had received supplemental musical experience in their lifetimes. Although both American and Japanese participants disliked formal Eastern styles of music and preferred Western styles of music, participants with greater musical experience showed a wider range of preference responses not specific to their own culture.

Dual cultures

Bimusicalism is a phenomenon in which people who are well versed in music from two different cultures exhibit sensitivity to both musical styles. In a study conducted with participants familiar with Western, Indian, and both Western and Indian music, the bimusical participants (exposed to both Indian and Western styles) showed no bias for either music style in recognition tasks and did not indicate that one style of music was more tense than the other. In contrast, the Western and Indian participants more successfully recognized music from their own culture and felt the other culture's music was more tense on the whole. These results indicate that everyday exposure to music from both cultures can result in cognitive sensitivity to music styles from those cultures.

Bilingualism typically confers specific preferences for the language of lyrics in a song. When monolingual (English-speaking) and bilingual (Spanish- and English-speaking) sixth graders listened to the same song played in an instrumental, English, or Spanish version, ratings of preference showed that bilingual students preferred the Spanish version, while monolingual students more often preferred the instrumental version; the children's self-reported distraction was the same for all excerpts. Spanish (bilingual) speakers also identified most closely with the Spanish song. Thus, the language of lyrics interacts with a listener's culture and language abilities to affect preferences.

Emotion recognition

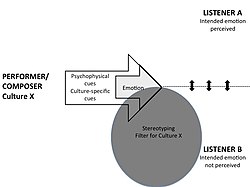

The cue-redundancy model of emotion recognition in music differentiates between universal, structural auditory cues and culturally bound, learned auditory cues (see schematic).

Psychophysical cues

Structural cues that span all musical traditions include dimensions such as pace (tempo), loudness, and timbre. Fast tempo, for example, is typically associated with happiness, regardless of a listener's cultural background.

Culturally bound cues

Culture-specific cues rely on knowledge of the conventions in a particular musical tradition. Ethnomusicologists have noted that, in many cultures, particular songs are sung only in particular situations; these occasions are marked by cultural cues that members of the culture recognize. A particular timbre may be interpreted to reflect one emotion by Western listeners and another emotion by Eastern listeners. Other culturally bound cues may exist as well: for example, rock 'n' roll is usually identified as a rebellious type of music associated with teenagers, reflecting the ideals and beliefs of their culture.

Cue-redundancy model

According to the cue-redundancy model, individuals exposed to music from their own cultural tradition utilize both psychophysical and culturally bound cues in identifying emotionality. Conversely, perception of intended emotion in unfamiliar music relies solely on universal, psychophysical properties. Japanese listeners accurately categorize angry, joyful, and sad musical excerpts from familiar traditions (Japanese and Western samples) and relatively unfamiliar traditions (Hindustani). Simple, fast melodies receive joyful ratings from these participants; simple, slow samples receive sad ratings; and loud, complex excerpts are perceived as angry. Strong relationships between emotional judgments and structural acoustic cues suggest the importance of universal musical properties in categorizing unfamiliar music.
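The distinction drawn by the model can be illustrated with a toy sketch. It is not an implementation from the literature: the `Excerpt` fields, the function names, and the discrete cue values are hypothetical, and the three rules simply restate the associations reported above (simple and fast as joyful, simple and slow as sad, loud and complex as angry). The sketch only shows which cue sources are available to a listener, depending on whether the excerpt's tradition is familiar.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Excerpt:
    """Hypothetical, highly simplified description of a musical excerpt."""
    tempo: str                          # "fast" or "slow"
    loudness: str                       # "loud" or "soft"
    complexity: str                     # "simple" or "complex"
    tradition: str                      # e.g. "Hindustani"
    cultural_cue: Optional[str] = None  # emotion signalled by tradition-specific conventions


def psychophysical_guess(excerpt: Excerpt) -> Optional[str]:
    """Emotion suggested by structural cues alone (illustrative rules only)."""
    if excerpt.loudness == "loud" and excerpt.complexity == "complex":
        return "anger"
    if excerpt.complexity == "simple" and excerpt.tempo == "fast":
        return "joy"
    if excerpt.complexity == "simple" and excerpt.tempo == "slow":
        return "sadness"
    return None


def available_cues(excerpt: Excerpt, familiar_traditions: set) -> dict:
    """Return the cue sources a listener can draw on for this excerpt."""
    cues = {}
    guess = psychophysical_guess(excerpt)
    if guess is not None:
        cues["psychophysical"] = guess
    # Culturally bound cues are usable only if the tradition is familiar.
    if excerpt.tradition in familiar_traditions and excerpt.cultural_cue is not None:
        cues["cultural"] = excerpt.cultural_cue
    return cues


raga = Excerpt(tempo="fast", loudness="soft", complexity="simple",
               tradition="Hindustani", cultural_cue="joy")
print(available_cues(raga, {"Japanese", "Western"}))  # psychophysical cue only
print(available_cues(raga, {"Hindustani"}))           # both cue sources, redundant
```

For the listener unfamiliar with the tradition, only the psychophysical cue is returned; for the familiar listener, both sources point to the same emotion, which is the redundancy the model's name refers to.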

When both Korean and American participants judged the intended emotion of Korean folksongs, the American group's identification of happy and sad songs was equivalent to levels observed for Korean listeners. Surprisingly, Americans exhibited greater accuracy in anger assessments than the Korean group. The latter result implies cultural differences in anger perception occur independently of familiarity, while the similarity of American and Korean happy and sad judgments indicates the role of universal auditory cues in emotional perception.

Categorization of unfamiliar music varies with intended emotion. Timbre mediates Western listeners' recognition of angry and peaceful Hindustani songs. Flute timbre supports the detection of peace, whereas string timbre aids anger identification. Happy and sad assessments, on the other hand, rely primarily on relatively "low-level" structural information such as tempo. Both low-level cues (e.g., slow tempo) and timbre aid in the detection of peaceful music, but only timbre cues anger recognition. Communication of peace, therefore, takes place at multiple structural levels, while anger seems to be conveyed nearly exclusively by timbre. Similarities between aggressive vocalizations and angry music (e.g., roughness) may contribute to the salience of timbre in anger assessments.

Stereotype theory of emotion in music

The stereotype theory of emotion in music (STEM) suggests that cultural stereotyping may affect emotion perceived in music. STEM argues that for some listeners with low expertise, emotion perception in music is based on stereotyped associations held by the listener about the encoding culture of the music (i.e., the culture representative of a particular music genre, such as Brazilian culture encoded in Bossa Nova music). STEM extends the cue-redundancy model: in addition to positing two sources of emotional cues, it explains some cultural cues specifically in terms of stereotyping. In particular, STEM predicts that emotion perceived in music depends to some extent on the cultural stereotyping of the music genre being heard.

Complexity

Because musical complexity is a psychophysical dimension, the cue-redundancy model predicts that complexity is perceived independently of experience. However, South African and Finnish listeners assign different complexity ratings to identical African folk songs. Thus, the cue-redundancy model may be overly simplistic in its distinctions between structural feature detection and cultural learning, at least in the case of complexity.

Repetition

When listening to music from within one's own cultural tradition, repetition plays a key role in emotion judgments. American listeners who hear classical or jazz excerpts multiple times rate the elicited and conveyed emotion of the pieces as higher relative to participants who hear the pieces once.

Methodological limitations

Methodological limitations of previous studies preclude a complete understanding of the roles of psychophysical cues in emotion recognition. Divergent mode and tone cues elicit "mixed affect", demonstrating the potential for mixed emotional percepts. Use of dichotomous scales (e.g., simple happy/sad ratings) may mask this phenomenon, as these tasks require participants to report a single component of a multidimensional affective experience.
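The masking effect of a dichotomous scale can be shown with a small sketch. Everything in it is assumed for illustration: the 0-to-1 rating scale, the function names, the `threshold` value, and the two example listeners are hypothetical and are not taken from any study. The point is only that a forced happy/sad choice reports the stronger component and discards the weaker one.

```python
def dichotomous_response(happy: float, sad: float) -> str:
    """Forced-choice report: only the stronger component survives."""
    return "happy" if happy >= sad else "sad"


def is_mixed(happy: float, sad: float, threshold: float = 0.3) -> bool:
    """Flag mixed affect when both components exceed an (assumed) threshold."""
    return min(happy, sad) >= threshold


# Two hypothetical listeners, each rating happiness and sadness separately.
mixed_listener = (0.6, 0.5)   # clearly both happy and sad
pure_listener = (0.6, 0.0)    # happy only

for happy, sad in (mixed_listener, pure_listener):
    print(dichotomous_response(happy, sad), is_mixed(happy, sad))
```

Both listeners give the same forced-choice answer, yet only separate ratings of each component reveal that the first experience was mixed.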

Memory

Enculturation is a powerful influence on music memory. Both long-term and working memory systems are critically involved in the appreciation and comprehension of music. Long-term memory enables the listener to develop musical expectation based on previous experience while working memory is necessary to relate pitches to one another in a phrase, between phrases, and throughout a piece.

Neuroscience

Neuroscientific evidence suggests that memory for music is, at least in part, special and distinct from other forms of memory. The neural processes of music memory retrieval share much with the neural processes of verbal memory retrieval, as indicated by functional magnetic resonance imaging studies comparing the brain areas activated during each task. Both musical and verbal memory retrieval activate the left inferior frontal cortex, which is thought to be involved in executive function, especially executive function of verbal retrieval, and the posterior middle temporal cortex, which is thought to be involved in semantic retrieval. However, musical semantic retrieval also bilaterally activates the superior temporal gyri containing the primary auditory cortex.

Effect of culture

Memory for music

Despite the universality of music, enculturation has a pronounced effect on individuals' memory for music. Evidence suggests that people develop their cognitive understanding of music from their cultures. People are best at recognizing and remembering music in the style of their native culture, and their recognition of and memory for music from familiar but nonnative cultures are better than for music from unfamiliar cultures. Part of the difficulty in remembering culturally unfamiliar music may arise from the use of different neural processes when listening to familiar and unfamiliar music. For instance, brain areas involved in attention, including the right angular gyrus and middle frontal gyrus, show increased activity when listening to culturally unfamiliar music compared to novel but culturally familiar music.

Development

Enculturation affects music memory in early childhood, before a child's cognitive schemata for music are fully formed, perhaps beginning as early as one year of age. Like adults, children are better able to remember novel music from their native culture than from unfamiliar cultures, although they are less capable than adults of remembering more complex music.

Children's developing music cognition may be influenced by the language of their native culture. For instance, children in English-speaking cultures develop the ability to identify pitches from familiar songs at 9 or 10 years old, while Japanese children develop the same ability at age 5 or 6. This difference may be due to the Japanese language's use of pitch accents, which encourages better pitch discrimination at an early age, rather than the stress accents upon which English relies.

Musical expectations

Enculturation also biases listeners' expectations such that they expect to hear tones that correspond to culturally familiar modal traditions. For example, Western participants presented with a series of pitches followed by a test tone not present in the original series were more likely to mistakenly indicate that the test tone was originally present if the tone was derived from a Western scale than if it was derived from a culturally unfamiliar scale. Recent research indicates that deviations from expectations in music may prompt out-group derogation.

Limits of enculturation

Despite the powerful effects of music enculturation, evidence indicates that cognitive comprehension of and affinity for different cultural modalities is somewhat plastic. One long-term instance of plasticity is bimusicalism, a musical phenomenon akin to bilingualism. Bimusical individuals frequently listen to music from two cultures and do not demonstrate the biases in recognition memory and perceptions of tension displayed by individuals whose listening experience is limited to one musical tradition.

Other evidence suggests that some changes in music appreciation and comprehension can occur over a short period of time. For instance, after half an hour of passive exposure to original melodies using familiar Western pitches in an unfamiliar musical grammar or harmonic structure (the Bohlen–Pierce scale), Western participants demonstrated increased recognition memory and greater affinity for melodies in this grammar. This suggests that even very brief exposure to unfamiliar music can rapidly affect music perception and memory.

See also

- Cognitive musicology

- Cognitive neuroscience of music

- Embodied music cognition

- Music therapy

- Psychology of music preference