Substitution model

In biology, substitution models, also called models of DNA sequence evolution, are Markov models that describe changes over evolutionary time. These models describe evolutionary changes in macromolecules (e.g., DNA sequences) represented as sequences of symbols (A, C, G, and T in the case of DNA). Substitution models are used to calculate the likelihood of phylogenetic trees using multiple sequence alignment data. Thus, substitution models are central to maximum likelihood estimation of phylogeny as well as Bayesian inference in phylogeny. Estimates of evolutionary distances (numbers of substitutions that have occurred since a pair of sequences diverged from a common ancestor) are typically calculated using substitution models (evolutionary distances are used as input for distance methods such as neighbor joining). Substitution models are also central to phylogenetic invariants because they are necessary to predict site pattern frequencies given a tree topology. Substitution models are also necessary to simulate sequence data for a group of organisms related by a specific tree.

Phylogenetic tree topologies and other parameters

Phylogenetic tree topologies are often the parameter of interest; thus, branch lengths and any other parameters describing the substitution process are often viewed as nuisance parameters. However, biologists are sometimes interested in the other aspects of the model. For example, branch lengths can be of interest in their own right, especially when they are combined with information from the fossil record and a model to estimate the timeframe for evolution. Other model parameters have been used to gain insights into various aspects of the process of evolution. The Ka/Ks ratio (also called ω in codon substitution models) is a parameter of interest in many studies. The Ka/Ks ratio can be used to examine the action of natural selection on protein-coding regions; it provides information about the relative rates of nucleotide substitutions that change amino acids (non-synonymous substitutions) to those that do not change the encoded amino acid (synonymous substitutions).
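The distinction between synonymous and non-synonymous substitutions can be made concrete by classifying single-nucleotide changes against the standard genetic code. The sketch below covers only that classification step, and the helper name `classify_change` is hypothetical. Estimating Ka/Ks itself also requires counting synonymous and non-synonymous sites and correcting for multiple substitutions, and neither is shown here.

```python
from itertools import product

# Standard genetic code, codons enumerated in TCAG order ("*" marks stop codons).
BASES = "TCAG"
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODE = {"".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO)}

def classify_change(codon: str, position: int, new_base: str) -> str:
    """Classify a single-nucleotide change (position 0, 1 or 2) in a sense codon."""
    before = CODE[codon]
    if before == "*":
        raise ValueError("not a sense codon")
    mutant = codon[:position] + new_base + codon[position + 1:]
    after = CODE[mutant]
    if after == "*":
        return "nonsense"  # creates a stop codon
    return "synonymous" if before == after else "non-synonymous"

sense_codons = [c for c, aa in CODE.items() if aa != "*"]
print(len(sense_codons))               # 61
print(classify_change("CTG", 2, "A"))  # CTG (Leu) -> CTA (Leu): synonymous
print(classify_change("CTG", 0, "A"))  # CTG (Leu) -> ATG (Met): non-synonymous
```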

Application to sequence data

Most of the work on substitution models has focused on DNA/RNA and protein sequence evolution. Models of DNA sequence evolution, where the alphabet corresponds to the four nucleotides (A, C, G, and T), are probably the easiest models to understand. DNA models can also be used to examine RNA virus evolution; this reflects the fact that RNA also has a four-nucleotide alphabet (A, C, G, and U). However, substitution models can be used for alphabets of any size; the alphabet is the 20 proteinogenic amino acids for proteins and the sense codons (i.e., the 61 codons that encode amino acids in the standard genetic code) for aligned protein-coding gene sequences. In fact, substitution models can be developed for any biological characters that can be encoded using a specific alphabet (e.g., amino acid sequences combined with information about the conformation of those amino acids in three-dimensional protein structures).

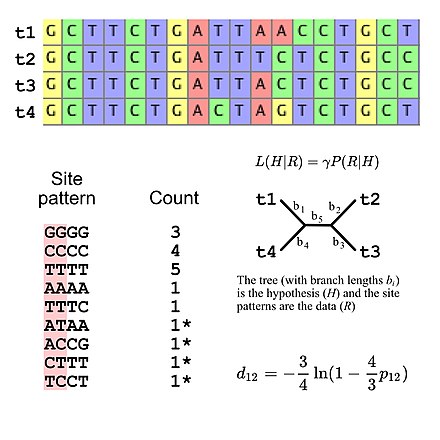

The majority of substitution models used for evolutionary research assume independence among sites (i.e., the probability of observing any specific site pattern is identical regardless of where the site pattern is in the sequence alignment). This simplifies likelihood calculations because it is only necessary to calculate the probability of each site pattern that appears in the alignment and then use those values to calculate the overall likelihood of the alignment (e.g., the probability of three "GGGG" site patterns given some model of DNA sequence evolution is simply the probability of a single "GGGG" site pattern raised to the third power). This means that substitution models can be viewed as implying a specific multinomial distribution for site pattern frequencies. If we consider a multiple sequence alignment of four DNA sequences, there are 256 (4^4) possible site patterns, so there are 255 degrees of freedom for the site pattern frequencies. However, it is possible to specify the expected site pattern frequencies using five degrees of freedom if using the Jukes-Cantor model of DNA evolution, which is a simple substitution model that allows one to calculate the expected site pattern frequencies using only the tree topology and the branch lengths (given four taxa, an unrooted bifurcating tree has five branch lengths).
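The following sketch shows how the Jukes-Cantor model turns five branch lengths into a full multinomial distribution over the 256 site patterns. It sums over the unobserved states at the two internal nodes of the unrooted four-taxon tree ((1,2),(3,4)). Branch lengths are assumed to be in expected substitutions per site, the code uses the standard JC69 transition probabilities, and the branch-length values are hypothetical.

```python
import itertools
import math

BASES = "ACGT"

def jc69_prob(i: str, j: str, t: float) -> float:
    """JC69 transition probability P_ij(t), with t in expected substitutions per site."""
    decay = math.exp(-4.0 * t / 3.0)
    return 0.25 + 0.75 * decay if i == j else 0.25 - 0.25 * decay

def site_pattern_prob(pattern: str, t: tuple[float, ...]) -> float:
    """Probability of one site pattern for taxa 1-4 on the unrooted tree ((1,2),(3,4)).

    t[0]..t[3] are the terminal branch lengths and t[4] is the internal branch.
    JC69 is time reversible, so the tree can be rooted at internal node u.
    """
    a, b, c, d = pattern
    total = 0.0
    for u in BASES:        # unobserved state at the node joining taxa 1 and 2
        for v in BASES:    # unobserved state at the node joining taxa 3 and 4
            total += (0.25  # JC69 equilibrium frequency of u
                      * jc69_prob(u, a, t[0]) * jc69_prob(u, b, t[1])
                      * jc69_prob(u, v, t[4])
                      * jc69_prob(v, c, t[2]) * jc69_prob(v, d, t[3]))
    return total

branch_lengths = (0.1, 0.2, 0.1, 0.3, 0.05)  # hypothetical five branch lengths
patterns = ["".join(p) for p in itertools.product(BASES, repeat=4)]
probs = {p: site_pattern_prob(p, branch_lengths) for p in patterns}

print(len(patterns))                   # 256
print(round(sum(probs.values()), 10))  # 1.0
print(probs["GGGG"] ** 3)              # probability of three independent "GGGG" sites
```

Enumerating internal-node states directly is only practical for tiny trees. General implementations compute the same sum recursively with Felsenstein's pruning algorithm.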

Substitution models also make it possible to simulate sequence data using Monte Carlo methods. Simulated multiple sequence alignments can be used to assess the performance of phylogenetic methods and generate the null distribution for certain statistical tests in the fields of molecular evolution and molecular phylogenetics. Examples of these tests include tests of model fit and the "SOWH test" that can be used to examine tree topologies.
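At its simplest, Monte Carlo simulation under a substitution model works site by site. For each site, it draws whether a change occurs along a branch and, if so, which base replaces the old one. The sketch below does this for a single branch under JC69. It assumes a branch length in expected substitutions per site and a root sequence drawn from the equilibrium frequencies, and the function name and values are illustrative. Simulating a whole alignment repeats the same step along every branch of a tree, starting from the root.

```python
import math
import random

BASES = "ACGT"

def simulate_jc69_descendant(ancestor: str, t: float, rng: random.Random) -> str:
    """Evolve `ancestor` along a branch of length t (expected substitutions/site) under JC69."""
    p_change = 0.75 * (1.0 - math.exp(-4.0 * t / 3.0))  # P(site differs after time t)
    descendant = []
    for base in ancestor:
        if rng.random() < p_change:
            # Under JC69 each of the three other bases is equally likely.
            descendant.append(rng.choice([b for b in BASES if b != base]))
        else:
            descendant.append(base)
    return "".join(descendant)

rng = random.Random(42)  # fixed seed so the simulation is reproducible
ancestor = "".join(rng.choice(BASES) for _ in range(100_000))  # equilibrium: 1/4 each
t = 0.3
descendant = simulate_jc69_descendant(ancestor, t, rng)

observed = sum(a != d for a, d in zip(ancestor, descendant)) / len(ancestor)
expected = 0.75 * (1.0 - math.exp(-4.0 * t / 3.0))
print(f"observed p = {observed:.4f}, expected p = {expected:.4f}")
```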

Application to morphological data

The fact that substitution models can be used to analyze any biological alphabet has made it possible to develop models of evolution for phenotypic datasets (e.g., morphological and behavioural traits). Typically, "0" is used to indicate the absence of a trait and "1" is used to indicate the presence of a trait, although it is also possible to score characters using multiple states. Using this framework, we might encode a set of phenotypes as binary strings (this could be generalized to k-state strings for characters with more than two states) before analysis using an appropriate model. This can be illustrated using a "toy" example: we can use a binary alphabet to score the following phenotypic traits "has feathers", "lays eggs", "has fur", "is warm-blooded", and "capable of powered flight". In this toy example, hummingbirds would have the sequence 11011 (most other birds would have the same string), ostriches would have the sequence 11010, cattle (and most other land mammals) would have 00110, and bats would have 00111. The likelihood of a phylogenetic tree can then be calculated using those binary sequences and an appropriate substitution model. The existence of these morphological models makes it possible to analyze data matrices with fossil taxa, either using the morphological data alone or a combination of morphological and molecular data (with the latter scored as missing data for the fossil taxa).

There is an obvious similarity between the use of molecular or phenotypic data in the field of cladistics and analyses of morphological characters using a substitution model. However, there has been a vociferous debate in the systematics community regarding the question of whether or not cladistic analyses should be viewed as "model-free". The field of cladistics (defined in the strictest sense) favors the use of the maximum parsimony criterion for phylogenetic inference. Many cladists reject the position that maximum parsimony is based on a substitution model and (in many cases) they justify the use of parsimony using the philosophy of Karl Popper. However, the existence of "parsimony-equivalent" models (i.e., substitution models that yield the maximum parsimony tree when used for analyses) makes it possible to view parsimony as a substitution model.
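For comparison with the model-based approach, the maximum parsimony criterion can be computed for the toy binary data from the morphological example with Fitch's small-parsimony algorithm. This algorithm counts the minimum number of state changes a given tree requires. The sketch below encodes the four taxa and scores the three possible unrooted four-taxon trees. The function names are illustrative, and real parsimony programs also search over tree space rather than scoring a fixed list.

```python
TRAITS = ["has feathers", "lays eggs", "has fur", "is warm-blooded", "capable of powered flight"]

# Toy presence/absence data from the morphological example.
TAXA = {
    "hummingbird": {"has feathers", "lays eggs", "is warm-blooded", "capable of powered flight"},
    "ostrich": {"has feathers", "lays eggs", "is warm-blooded"},
    "cattle": {"has fur", "is warm-blooded"},
    "bat": {"has fur", "is warm-blooded", "capable of powered flight"},
}

def encode(present: set[str]) -> str:
    """Score each trait as 1 (present) or 0 (absent), in the fixed TRAITS order."""
    return "".join("1" if trait in present else "0" for trait in TRAITS)

SEQUENCES = {name: encode(traits) for name, traits in TAXA.items()}

def fitch(tree, states: dict[str, str]) -> tuple[set[str], int]:
    """Fitch small parsimony for one character on a binary tree of nested tuples."""
    if isinstance(tree, str):  # leaf: its observed state, no changes needed
        return {states[tree]}, 0
    left_set, left_cost = fitch(tree[0], states)
    right_set, right_cost = fitch(tree[1], states)
    common = left_set & right_set
    if common:
        return common, left_cost + right_cost
    return left_set | right_set, left_cost + right_cost + 1  # one extra change

def parsimony_score(tree) -> int:
    """Sum the Fitch cost over all characters (characters are treated independently)."""
    return sum(
        fitch(tree, {name: seq[k] for name, seq in SEQUENCES.items()})[1]
        for k in range(len(TRAITS))
    )

# The three unrooted topologies for four taxa; prints scores 5, 7 and 8.
for tree in [(("hummingbird", "ostrich"), ("cattle", "bat")),
             (("hummingbird", "bat"), ("ostrich", "cattle")),
             (("hummingbird", "cattle"), ("ostrich", "bat"))]:
    print(tree, parsimony_score(tree))
```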

The molecular clock and the units of time

Typically, a branch length of a phylogenetic tree is expressed as the expected number of substitutions per site; if the evolutionary model indicates that each site within an ancestral sequence will typically experience x substitutions by the time it evolves to a particular descendant's sequence then the ancestor and descendant are considered to be separated by branch length x.

Sometimes a branch length is measured in terms of geological years. For example, a fossil record may make it possible to determine the number of years between an ancestral species and a descendant species. Because some species evolve at faster rates than others, these two measures of branch length are not always in direct proportion. The expected number of substitutions per site per year is often indicated with the Greek letter mu (μ).

A model is said to have a strict molecular clock if the expected number of substitutions per site per year, μ, is constant regardless of which species' evolution is being examined. An important implication of a strict molecular clock is that the expected number of substitutions between an ancestral species and any of its present-day descendants must be independent of which descendant species is examined.
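For illustration, the strict-clock assumption can be written as a short derivation. Suppose two present-day species share an ancestor that lived $T$ years ago, and the clock rate is $\mu$ expected substitutions per site per year. Write $b_1$ and $b_2$ for the branch lengths from the ancestor to each descendant and $d_{12}$ for the distance between the two descendants. These symbols are introduced only for this example, and all quantities are in expected substitutions per site:

$$b_1 = b_2 = \mu T, \qquad d_{12} = b_1 + b_2 = 2\mu T \quad\Longrightarrow\quad T = \frac{d_{12}}{2\mu}$$

This is why a strict clock, once calibrated (for example with a fossil date), allows divergence times to be estimated from evolutionary distances. Under a relaxed clock, this simple relation no longer holds.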

Note that the assumption of a strict molecular clock is often unrealistic, especially across long periods of evolution. For example, even though rodents are genetically very similar to primates, they have undergone a much higher number of substitutions in the estimated time since divergence in some regions of the genome. This could be due to their shorter generation time, higher metabolic rate, increased population structuring, increased rate of speciation, or smaller body size. When studying ancient events like the Cambrian explosion under a molecular clock assumption, poor concurrence between cladistic and phylogenetic data is often observed. There has been some work on models allowing variable rates of evolution.

Models that can take into account variability of the rate of the molecular clock between different evolutionary lineages in the phylogeny are called “relaxed”, as opposed to “strict”. In such models, the rate can be assumed to be correlated or not between ancestors and descendants. Rate variation among lineages can be drawn from many distributions, although exponential and lognormal distributions are usually applied. There is a special case, called a “local molecular clock”, in which a phylogeny is divided into at least two partitions (sets of lineages) and a strict molecular clock is applied in each, but with different rates.
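One simple way to picture an uncorrelated relaxed clock is to give every branch its own rate multiplier, drawn independently from a lognormal distribution. The sketch assumes a lognormal with mean 1 (log-scale mean of −σ²/2) and uses hypothetical branch durations and a hypothetical base rate. Setting σ = 0 recovers a strict clock. Correlated relaxed clocks, in which a branch's rate depends on its parent's rate, are not shown.

```python
import random

def uncorrelated_lognormal_rates(n_branches: int, sigma: float, rng: random.Random) -> list[float]:
    """Draw one rate multiplier per branch, independently of every other branch.

    The log-scale mean is -sigma**2 / 2, so each multiplier has expectation 1 and
    rescales the overall clock rate without changing it on average.
    """
    log_mean = -0.5 * sigma ** 2
    return [rng.lognormvariate(log_mean, sigma) for _ in range(n_branches)]

rng = random.Random(1)
durations = [10.0, 10.0, 25.0, 25.0, 15.0]  # hypothetical branch durations (millions of years)
base_rate = 0.002                           # hypothetical substitutions/site per million years
multipliers = uncorrelated_lognormal_rates(len(durations), sigma=0.3, rng=rng)

# Branch length in expected substitutions per site = (rate x multiplier) x time.
branch_lengths = [base_rate * m * d for m, d in zip(multipliers, durations)]
print([round(b, 4) for b in branch_lengths])
```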

Time-reversible and stationary models

Many useful substitution models are time-reversible; in terms of the mathematics, the model does not care which sequence is the ancestor and which is the descendant so long as all other parameters (such as the number of substitutions per site that is expected between the two sequences) are held constant.

When an analysis of real biological data is performed, there is generally no access to the sequences of ancestral species, only to those of the present-day species. However, when a model is time-reversible, which species was the ancestral species is irrelevant. Instead, the phylogenetic tree can be rooted using any of the species, re-rooted later based on new knowledge, or left unrooted. This is because there is no 'special' species; all species will eventually derive from one another with the same probability.

A model is time reversible if and only if it satisfies the property (the notation is explained below)

$$\pi_i Q_{ij} = \pi_j Q_{ji}$$

or, equivalently, the detailed balance property,

$$\pi_i P(t)_{ij} = \pi_j P(t)_{ji}$$

for every $i$, $j$, and $t$.
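The rate-matrix form of the time-reversibility condition, πᵢQᵢⱼ = πⱼQⱼᵢ, is easy to check numerically. Multiply each row i of Q by πᵢ and test whether the result is symmetric. The sketch below uses NumPy and two hypothetical matrices. The first is an HKY85-style matrix built with off-diagonal rates rᵢⱼπⱼ, which is time reversible by construction. The second is a "cyclic" matrix (A→C→G→T→A) whose equilibrium frequencies are uniform but which violates detailed balance. The function names and tolerance are illustrative choices.

```python
import numpy as np

def satisfies_detailed_balance(Q: np.ndarray, pi: np.ndarray, tol: float = 1e-12) -> bool:
    """Return True if pi_i * Q_ij == pi_j * Q_ji for every i, j (within tol)."""
    flux = pi[:, np.newaxis] * Q  # flux[i, j] = pi_i * Q_ij
    return bool(np.allclose(flux, flux.T, atol=tol, rtol=0.0))

def with_zero_row_sums(Q: np.ndarray) -> np.ndarray:
    """Set each diagonal element to minus the sum of the off-diagonal elements in its row."""
    Q = Q.copy()
    np.fill_diagonal(Q, 0.0)
    np.fill_diagonal(Q, -Q.sum(axis=1))
    return Q

# Hypothetical HKY85-style model, state order A, C, G, T.
pi = np.array([0.1, 0.2, 0.3, 0.4])
kappa = 4.0                                    # transition/transversion rate ratio
R = np.ones((4, 4))
R[0, 2] = R[2, 0] = R[1, 3] = R[3, 1] = kappa  # A<->G and C<->T are transitions
Q_hky = with_zero_row_sums(R * pi[np.newaxis, :])

# Cyclic model A->C->G->T->A: uniform equilibrium, but not time reversible.
Q_cyc = with_zero_row_sums(np.roll(np.eye(4), 1, axis=1))
uniform = np.full(4, 0.25)

print(satisfies_detailed_balance(Q_hky, pi))       # True
print(satisfies_detailed_balance(Q_cyc, uniform))  # False
```

The cyclic example shows why stationarity alone does not imply reversibility. Its uniform frequencies are at equilibrium, yet probability flows around the cycle in one direction only.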

Time-reversibility should not be confused with stationarity. A model is stationary if Q does not change with time. The analysis below assumes a stationary model.

The mathematics of substitution models

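Stationary, neutral, independent, finite sites models (assuming a constant rate of evolution) have two parameters: π, an equilibrium vector of base (or character) frequencies, and a rate matrix, Q, which describes the rate at which bases of one type change into bases of another type. Element $Q_{ij}$ for $i \neq j$ is the rate at which base $i$ goes to base $j$. The diagonal elements of Q are chosen so that each row sums to zero:

$$Q_{ii} = -\sum_{j \neq i} Q_{ij}$$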

The equilibrium row vector π must be annihilated by the rate matrix Q:

$$\pi Q = 0$$
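Because π Q = 0 is a linear system, the equilibrium frequencies implied by a given rate matrix can be found numerically. The sketch below uses NumPy, and the function name is illustrative. It adds the constraint that the frequencies sum to one and solves by least squares. The test case is a hypothetical F81-style matrix whose equilibrium frequencies are known in advance.

```python
import numpy as np

def stationary_distribution(Q: np.ndarray) -> np.ndarray:
    """Solve pi Q = 0 together with sum(pi) = 1.

    pi Q = 0 is the same as Q^T pi^T = 0; the normalization row is appended and the
    consistent, overdetermined system is solved by least squares.
    """
    n = Q.shape[0]
    A = np.vstack([Q.T, np.ones((1, n))])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pi

# Hypothetical F81-style rate matrix: the rate into base j is proportional to target_j.
target = np.array([0.1, 0.2, 0.3, 0.4])
Q = np.tile(target, (4, 1))
np.fill_diagonal(Q, 0.0)
np.fill_diagonal(Q, -Q.sum(axis=1))

pi = stationary_distribution(Q)
print(np.round(pi, 6))  # approximately [0.1, 0.2, 0.3, 0.4]
```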

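The transition matrix function is a function from the branch lengths (in some units of time, possibly in substitutions) to a matrix of conditional probabilities. It is denoted $P(t)$. The entry in the $i$th row and $j$th column, $P_{ij}(t)$, is the probability, after time $t$, that there is a base $j$ at a given position, conditional on there being a base $i$ in that position at time 0.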

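The asymptotic properties of $P_{ij}(t)$ are such that $P_{ij}(0) = \delta_{ij}$, where $\delta_{ij}$ is the Kronecker delta function. That is, there is no change in base composition between a sequence and itself. At the other extreme,

$$\lim_{t \to \infty} P_{ij}(t) = \pi_j$$

In other words, as time goes to infinity, the probability of finding base $j$ at a position, given that there was a base $i$ at that position originally, goes to the equilibrium probability of base $j$, regardless of the original base.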

The transition matrix can be computed from the rate matrix via matrix exponentiation:

$$P(t) = e^{Qt} = \sum_{n=0}^{\infty} \frac{Q^n t^n}{n!}$$

where $Q^n$ is the matrix Q multiplied by itself enough times to give its $n$th power.

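If Q is diagonalizable, the matrix exponential can be computed directly: let $Q = U^{-1} \Lambda U$ be a diagonalization of Q, with

$$\Lambda = \begin{pmatrix} \lambda_1 & \ldots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \ldots & \lambda_4 \end{pmatrix}$$

where Λ is a diagonal matrix and where $\{\lambda_i\}$ are the eigenvalues of Q, each repeated according to its multiplicity. Then

$$P(t) = e^{U^{-1} \Lambda U t} = U^{-1} e^{\Lambda t} U$$

where the diagonal matrix $e^{\Lambda t}$ is given by

$$e^{\Lambda t} = \begin{pmatrix} e^{\lambda_1 t} & \ldots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \ldots & e^{\lambda_4 t} \end{pmatrix}$$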
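The two routes to P(t) are the power series P(t) = Σ (Qt)ⁿ/n! and the diagonalization P(t) = U⁻¹ e^{Λt} U, and they can be compared numerically. The sketch below uses NumPy only, and the function names are illustrative. It evaluates the series with the common scaling-and-squaring trick, which relies on e^{Qt} = (e^{Qt/2^s})^{2^s}. It evaluates the diagonalization with `numpy.linalg.eig`, whose matrix of right eigenvectors plays the role of U⁻¹. The HKY85-style rate matrix and its parameter values are hypothetical.

```python
import numpy as np

def transition_matrix_series(Q, t, terms=30, squarings=8):
    """P(t) from the truncated series sum_n (Qt)^n / n!, using scaling and squaring."""
    A = Q * t / 2.0 ** squarings       # small matrix, so the series converges quickly
    identity = np.eye(Q.shape[0])
    P, term = identity.copy(), identity.copy()
    for n in range(1, terms + 1):
        term = term @ A / n            # term is now A^n / n!
        P = P + term
    for _ in range(squarings):         # undo the scaling by squaring s times
        P = P @ P
    return P

def transition_matrix_eig(Q, t):
    """P(t) = U^{-1} e^{Lambda t} U, where Q = U^{-1} Lambda U."""
    eigenvalues, U_inv = np.linalg.eig(Q)  # columns of U_inv are right eigenvectors
    U = np.linalg.inv(U_inv)
    P = U_inv @ np.diag(np.exp(eigenvalues * t)) @ U
    return P.real  # any imaginary parts are rounding noise for a reversible Q

# Hypothetical HKY85-style rate matrix, state order A, C, G, T.
pi = np.array([0.1, 0.2, 0.3, 0.4])
R = np.ones((4, 4))
R[0, 2] = R[2, 0] = R[1, 3] = R[3, 1] = 4.0  # transitions 4x faster than transversions
Q = R * pi[np.newaxis, :]
np.fill_diagonal(Q, 0.0)
np.fill_diagonal(Q, -Q.sum(axis=1))

P_series = transition_matrix_series(Q, 0.5)
P_eig = transition_matrix_eig(Q, 0.5)
print(np.max(np.abs(P_series - P_eig)))  # close to machine precision
```

For time-reversible models, a common alternative is to symmetrize Q using the equilibrium frequencies and call a symmetric eigensolver. That guarantees real eigenvalues.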

Generalised time reversible

Generalised time reversible (GTR) is the most general neutral, independent, finite-sites, time-reversible model possible. It was first described in a general form by Simon Tavaré in 1986. The GTR model is often called the general time reversible model in publications; it has also been called the REV model.

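The GTR parameters for nucleotides consist of an equilibrium base frequency vector, $\vec{\pi} = (\pi_1, \pi_2, \pi_3, \pi_4)$, giving the probability with which each base occurs at each site, and the rate matrix

$$Q = \begin{pmatrix} -(x_1+x_2+x_3) & x_1 & x_2 & x_3 \\ \frac{\pi_1 x_1}{\pi_2} & -\left(\frac{\pi_1 x_1}{\pi_2}+x_4+x_5\right) & x_4 & x_5 \\ \frac{\pi_1 x_2}{\pi_3} & \frac{\pi_2 x_4}{\pi_3} & -\left(\frac{\pi_1 x_2}{\pi_3}+\frac{\pi_2 x_4}{\pi_3}+x_6\right) & x_6 \\ \frac{\pi_1 x_3}{\pi_4} & \frac{\pi_2 x_5}{\pi_4} & \frac{\pi_3 x_6}{\pi_4} & -\left(\frac{\pi_1 x_3}{\pi_4}+\frac{\pi_2 x_5}{\pi_4}+\frac{\pi_3 x_6}{\pi_4}\right) \end{pmatrix}$$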

Because the model must be time reversible and must approach the equilibrium nucleotide (base) frequencies at long times, each rate below the diagonal equals the rate of the reverse change (above the diagonal) multiplied by the equilibrium ratio of the two bases. As such, the nucleotide GTR requires 6 substitution rate parameters and 4 equilibrium base frequency parameters. Since the 4 frequency parameters must sum to 1, there are only 3 free frequency parameters. The total of 9 free parameters is often further reduced to 8 parameters plus $\mu$, the overall number of substitutions per unit time. When time is measured in substitutions ($\mu = 1$), 8 free parameters remain.

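In general, to compute the number of parameters, count the number of entries above the diagonal in the matrix, i.e., $\frac{n^2 - n}{2}$ for $n$ trait values per site. Then add $n - 1$ for the equilibrium frequencies and subtract 1 because the overall rate is fixed. This gives

$$\frac{n^2 - n}{2} + (n - 1) - 1 = \frac{1}{2}n^2 + \frac{1}{2}n - 2$$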

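For example, for an amino acid sequence (there are 20 "standard" amino acids that make up proteins), you would find there are 208 parameters. However, when studying coding regions of the genome, it is more common to work with a codon substitution model (a codon is three bases and codes for one amino acid in a protein). There are $4^3 = 64$ codons, but only 61 sense codons in the standard genetic code (the three stop codons are excluded), so a codon GTR model would have $\frac{1}{2}(61^2) + \frac{1}{2}(61) - 2 = 1889$ free parameters.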
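The counting rule can be written as a one-line function, and the name `gtr_free_parameters` is hypothetical. As in the nucleotide case, the function counts the n(n − 1)/2 exchangeabilities above the diagonal and the n − 1 free equilibrium frequencies. It then removes one parameter by fixing the overall rate, which reproduces the 8 and 208 parameters quoted for nucleotides and amino acids.

```python
def gtr_free_parameters(n: int) -> int:
    """Free parameters of an n-state GTR model with the overall rate fixed."""
    exchangeabilities = n * (n - 1) // 2        # entries above the diagonal of Q
    frequencies = n - 1                         # n equilibrium frequencies summing to 1
    return exchangeabilities + frequencies - 1  # minus 1 because the overall rate is fixed

for label, n in [("nucleotides", 4), ("amino acids", 20), ("sense codons", 61)]:
    print(label, gtr_free_parameters(n))
# nucleotides 8, amino acids 208, sense codons 1889
```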

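An alternative (and commonly used) way to write the instantaneous rate matrix ($Q$ matrix) for the nucleotide GTR model is

$$Q = \begin{pmatrix} \ast & r_{AC}\pi_C & r_{AG}\pi_G & r_{AT}\pi_T \\ r_{AC}\pi_A & \ast & r_{CG}\pi_G & r_{CT}\pi_T \\ r_{AG}\pi_A & r_{CG}\pi_C & \ast & r_{GT}\pi_T \\ r_{AT}\pi_A & r_{CT}\pi_C & r_{GT}\pi_G & \ast \end{pmatrix}$$

where each diagonal entry $\ast$ is set so that its row sums to zero.

The $Q$ matrix is normalized so that $-\sum_i \pi_i Q_{ii} = 1$.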

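This notation is easier to understand than the notation originally used by Tavaré, because all model parameters correspond either to "exchangeability" parameters ($r_{AC}$, $r_{AG}$, $r_{AT}$, $r_{CG}$, $r_{CT}$, $r_{GT}$) or to equilibrium nucleotide frequencies ($\pi_A$, $\pi_C$, $\pi_G$, $\pi_T$).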

Some publications write the nucleotides in a different order (e.g., some authors choose to group the two purines together and the two pyrimidines together; see also models of DNA evolution). These differences in notation make it important to be clear regarding the order of the states when writing the $Q$ matrix.

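The value of this notation is that the instantaneous rate of change from nucleotide $i$ to nucleotide $j$ can always be written as $r_{ij}\pi_j$, where $r_{ij}$ is the exchangeability of nucleotides $i$ and $j$ and $\pi_j$ is the equilibrium frequency of nucleotide $j$.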

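Note that the ordering of the nucleotide subscripts for exchangeability parameters is irrelevant (e.g., $r_{AC} = r_{CA}$), but the rates themselves are not symmetric: $Q_{AC} = r_{AC}\pi_C$ differs from $Q_{CA} = r_{AC}\pi_A$ unless $\pi_A = \pi_C$.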

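An arbitrarily chosen exchangeability parameter (e.g., $r_{GT}$) is typically fixed at 1, because only the relative values of the exchangeability parameters matter once $Q$ is normalized.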

The alternative notation also makes it easier to understand the sub-models of the GTR model, which simply correspond to cases where exchangeability and/or equilibrium base frequency parameters are constrained to take on equal values. A number of specific sub-models have been named, largely based on their original publications:

| Model | Exchangeability parameters | Base frequency parameters | Reference |
|---|---|---|---|
| JC69 (or JC) | $r_{AC} = r_{AG} = r_{AT} = r_{CG} = r_{CT} = r_{GT}$ | $\pi_A = \pi_C = \pi_G = \pi_T = 0.25$ | Jukes and Cantor (1969) |
| F81 | $r_{AC} = r_{AG} = r_{AT} = r_{CG} = r_{CT} = r_{GT}$ | all $\pi$ values free | Felsenstein (1981) |
| K2P (or K80) | $r_{AC} = r_{AT} = r_{CG} = r_{GT}$ (transversions), $r_{AG} = r_{CT}$ (transitions) | $\pi_A = \pi_C = \pi_G = \pi_T = 0.25$ | Kimura (1980) |
| HKY85 | $r_{AC} = r_{AT} = r_{CG} = r_{GT}$ (transversions), $r_{AG} = r_{CT}$ (transitions) | all $\pi$ values free | Hasegawa et al. (1985) |
| K3ST (or K81) | $r_{AC} = r_{GT}$ (transversions), $r_{AT} = r_{CG}$ (transversions), $r_{AG} = r_{CT}$ (transitions) | $\pi_A = \pi_C = \pi_G = \pi_T = 0.25$ | Kimura (1981) |
| TN93 | $r_{AC} = r_{AT} = r_{CG} = r_{GT}$ (transversions), $r_{AG}$ (transitions), $r_{CT}$ (transitions) | all $\pi$ values free | Tamura and Nei (1993) |
| SYM | all exchangeability parameters free | $\pi_A = \pi_C = \pi_G = \pi_T = 0.25$ | Zharkikh (1994) |
| GTR (or REV) | all exchangeability parameters free | all $\pi$ values free | Tavaré (1986) |

There are 203 possible ways that the exchangeability parameters can be restricted to form sub-models of GTR, ranging from the JC69 and F81 models (where all exchangeability parameters are equal) to the SYM model and the full GTR (or REV) model (where all exchangeability parameters are free). The equilibrium base frequencies are typically treated in two different ways: 1) all $\pi$ values are constrained to equal 0.25; or 2) all $\pi$ values are treated as free parameters.
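The figure of 203 is the number of ways to partition the six exchangeability parameters into groups whose members are constrained to be equal. This is the sixth Bell number. The sketch below enumerates those partitions recursively and checks that several named models correspond to particular partitions. The dictionary encodes the standard equality constraints of those models, and the function names are illustrative.

```python
EXCHANGEABILITIES = ["AC", "AG", "AT", "CG", "CT", "GT"]

def set_partitions(items):
    """Yield every partition of `items` into non-empty blocks.

    Each block is a group of exchangeability parameters constrained to share one value.
    """
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        for i in range(len(partition)):  # add `first` to an existing block ...
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        yield [[first]] + partition      # ... or give `first` a block of its own

def canonical(partition):
    """Order-independent representation, so partitions can be compared as sets."""
    return frozenset(frozenset(block) for block in partition)

all_submodels = {canonical(p) for p in set_partitions(EXCHANGEABILITIES)}
print(len(all_submodels))  # 203

NAMED_CONSTRAINTS = {
    "JC69/F81": [["AC", "AG", "AT", "CG", "CT", "GT"]],
    "K80/HKY85": [["AC", "AT", "CG", "GT"], ["AG", "CT"]],
    "K81": [["AC", "GT"], ["AT", "CG"], ["AG", "CT"]],
    "TN93": [["AC", "AT", "CG", "GT"], ["AG"], ["CT"]],
    "SYM/GTR": [[r] for r in EXCHANGEABILITIES],
}
for name, blocks in NAMED_CONSTRAINTS.items():
    print(name, canonical(blocks) in all_submodels, len(blocks), "distinct rate(s)")
```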

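The alternative notation also makes it straightforward to see how the GTR model can be applied to biological alphabets with a larger state-space (e.g., amino acids or codons). For any alphabet of $k$ character states, it is possible to write a set of equilibrium state frequencies as $\pi_1, \pi_2, \ldots, \pi_k$ and a set of exchangeability parameters $r_{ij}$. The off-diagonal elements of the $Q$ matrix are then $Q_{ij} = r_{ij}\pi_j$, and each diagonal element is set to the negative sum of the off-diagonal elements in its row.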
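For an alphabet of any size, a rate matrix can be assembled from a symmetric exchangeability matrix and a frequency vector. Each off-diagonal rate is set to Qᵢⱼ = rᵢⱼπⱼ, and the diagonal is chosen so each row sums to zero. The sketch below uses NumPy, and the function name is illustrative. It also assumes the common normalization in which the expected number of substitutions per unit time at equilibrium is 1, so branch lengths are in expected substitutions per site. The 20-state parameter values are random placeholders, not an empirical amino acid model.

```python
import numpy as np

def gtr_rate_matrix(exchangeabilities: np.ndarray, pi: np.ndarray) -> np.ndarray:
    """Q_ij = r_ij * pi_j off the diagonal, rows summing to zero, normalized so that
    -sum_i pi_i Q_ii = 1 (one expected substitution per unit time at equilibrium)."""
    R = np.asarray(exchangeabilities, dtype=float)
    pi = np.asarray(pi, dtype=float)
    if not np.allclose(R, R.T):
        raise ValueError("exchangeabilities must be symmetric (r_ij = r_ji)")
    Q = R * pi[np.newaxis, :]
    np.fill_diagonal(Q, 0.0)
    np.fill_diagonal(Q, -Q.sum(axis=1))
    return Q / -(pi @ np.diag(Q))

rng = np.random.default_rng(0)
k = 20                                        # e.g. the 20 amino acids
upper = np.triu(rng.random((k, k)), 1)
R = upper + upper.T                           # random symmetric exchangeabilities
pi = rng.dirichlet(np.ones(k))                # random equilibrium frequencies
Q = gtr_rate_matrix(R, pi)

print(np.allclose(Q.sum(axis=1), 0.0))        # rows sum to zero
print(np.isclose(-(pi @ np.diag(Q)), 1.0))    # normalized overall rate
print(np.allclose(pi @ Q, 0.0))               # pi is the equilibrium vector
```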

Mechanistic vs. empirical models

A main difference among evolutionary models is how many parameters are estimated anew for each data set under consideration and how many are estimated once on a large data set. Mechanistic models describe all substitutions as a function of a number of parameters which are estimated for every data set analyzed, preferably using maximum likelihood. This has the advantage that the model can be adjusted to the particularities of a specific data set (e.g., different composition biases in DNA). Problems can arise when too many parameters are used, particularly if they can compensate for each other (this can lead to non-identifiability). In that case, the data set is often too small to yield enough information to estimate all parameters accurately.

Empirical models are created by estimating many parameters (typically all entries of the rate matrix as well as the character frequencies; see the GTR model above) from a large data set. These parameters are then fixed and reused for every data set. This has the advantage that those parameters can be estimated more accurately; normally, it is not possible to estimate all entries of the substitution matrix from the current data set alone. On the downside, the parameters estimated from the training data might be too generic and therefore fit any particular dataset poorly. A potential solution to that problem is to estimate some parameters from the data using maximum likelihood (or some other method). In studies of protein evolution, the equilibrium amino acid frequencies are often estimated from the data while the exchangeability parameters are kept fixed.
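Estimating the equilibrium amino acid frequencies from the data can be as simple as counting residues in the alignment. The sketch below assumes that simple counting approach (some programs instead estimate the frequencies by maximum likelihood). It ignores gaps and ambiguity codes, and the alignment is a hypothetical example.

```python
from collections import Counter

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # one-letter codes of the 20 standard amino acids

def empirical_frequencies(alignment: list[str]) -> dict[str, float]:
    """Observed frequency of each amino acid, ignoring gaps and other symbols."""
    counts = Counter(ch for seq in alignment for ch in seq.upper() if ch in AMINO_ACIDS)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("alignment contains no standard amino acids")
    return {aa: counts[aa] / total for aa in AMINO_ACIDS}

alignment = ["MKV-A", "MKIAA", "MRVAA"]  # hypothetical aligned sequences
freqs = empirical_frequencies(alignment)
print({aa: round(f, 3) for aa, f in freqs.items() if f > 0})
```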

With large-scale genome sequencing still producing very large amounts of DNA and protein sequences, there is enough data available to create empirical models with any number of parameters, including empirical codon models. Because of the problems mentioned above, the two approaches are often combined: most of the parameters are estimated once on large-scale data, and a few remaining parameters are then adjusted to the data set under consideration. The following sections give an overview of the different approaches taken for DNA, protein, and codon-based models.

DNA substitution models

The first model of DNA evolution was proposed by Jukes and Cantor in 1969. The Jukes-Cantor (JC or JC69) model assumes equal rates for all substitutions as well as equal equilibrium frequencies for all bases, and it is the simplest sub-model of the GTR model. In 1980, Motoo Kimura introduced a model with two parameters (K2P or K80): one for the transition rate and one for the transversion rate. A year later, Kimura introduced a second model (K3ST, K3P, or K81) with three substitution types: one for the transition rate, one for the rate of transversions that conserve the strong/weak properties of nucleotides (A ↔ T and C ↔ G), and one for the rate of transversions that conserve the amino/keto properties of nucleotides (A ↔ C and G ↔ T).
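These models lead directly to the evolutionary distances mentioned at the start of the article. Inverting a model's expected proportion of differing sites gives an estimate of the number of substitutions per site. The sketch below implements the standard JC69 and K80 distance formulas. It assumes aligned, ungapped sequences over A, C, G, and T, and the helper names and example sequences are hypothetical.

```python
import math

PURINES = {"A", "G"}
PYRIMIDINES = {"C", "T"}

def difference_proportions(seq1: str, seq2: str) -> tuple[float, float]:
    """Proportions of sites differing by a transition (P) and by a transversion (Q)."""
    if len(seq1) != len(seq2):
        raise ValueError("sequences must be aligned to the same length")
    transitions = transversions = 0
    for a, b in zip(seq1.upper(), seq2.upper()):
        if a == b:
            continue
        pair = {a, b}
        if pair <= PURINES or pair <= PYRIMIDINES:
            transitions += 1    # A<->G or C<->T
        else:
            transversions += 1  # purine <-> pyrimidine
    n = len(seq1)
    return transitions / n, transversions / n

def jc69_distance(p: float) -> float:
    """JC69 distance from the proportion p of differing sites (valid for p < 3/4)."""
    return -0.75 * math.log(1.0 - 4.0 * p / 3.0)

def k80_distance(P: float, Q: float) -> float:
    """K80 distance from transition (P) and transversion (Q) proportions."""
    return -0.5 * math.log(1.0 - 2.0 * P - Q) - 0.25 * math.log(1.0 - 2.0 * Q)

P, Q = difference_proportions("ACGTACGTAC", "ACGTGCGTTC")  # hypothetical sequences
print(P, Q, jc69_distance(P + Q), k80_distance(P, Q))
```

When transitions make up exactly one third of the differences (P = p/3 and Q = 2p/3), the K80 formula reduces algebraically to the JC69 formula.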

Almost all DNA substitution models are mechanistic models (as described above). The small number of parameters that one needs to estimate for these models makes it feasible to estimate those parameters from the data. It is also necessary because the patterns of DNA sequence evolution often differ among organisms and among genes within organisms. The latter may reflect optimization by the action of selection for specific purposes (e.g., fast expression or messenger RNA stability) or it might reflect neutral variation in the patterns of substitution. Thus, depending on the organism and the type of gene, it is likely necessary to adjust the model to these circumstances.

Two-state substitution models

An alternative way to analyze DNA sequence data is to recode the nucleotides as purines (R) and pyrimidines (Y); this practice is often called RY-coding. Insertions and deletions in multiple sequence alignments can also be encoded as binary data and analyzed using a two-state model.

The simplest two-state model of sequence evolution is called the Cavender-Farris model or the Cavender-Farris-Neyman (CFN) model; the name of this model reflects the fact that it was described independently in several different publications. The CFN model is identical to the Jukes-Cantor model adapted to two states, and it has even been implemented as the "JC2" model in the popular IQ-TREE software package. Using this model in IQ-TREE requires coding the data as 0 and 1 rather than R and Y, whereas the popular PAUP* software package can interpret a data matrix comprising only R and Y as data to be analyzed using the CFN model. It is also straightforward to analyze binary data using the phylogenetic Hadamard transform. The alternative two-state model allows the equilibrium frequency parameters of R and Y (or 0 and 1) to take on values other than 0.5 by adding a single free parameter; this model is variously called CFu or GTR2 (in IQ-TREE).
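The sketch below shows RY-coding followed by the two-state analogue of the Jukes-Cantor distance, d = −½ ln(1 − 2p). That formula follows from the CFN model when branch lengths are measured in expected substitutions per site. The function names and sequences are hypothetical. The example also shows that transitions (C↔T here) disappear after RY-coding, so only the transversion contributes to the distance.

```python
import math

RY_CODES = {"A": "R", "G": "R", "C": "Y", "T": "Y"}

def ry_code(seq: str) -> str:
    """Recode nucleotides as purines (R) or pyrimidines (Y); other symbols become '?'."""
    return "".join(RY_CODES.get(base, "?") for base in seq.upper())

def cfn_distance(seq1: str, seq2: str) -> float:
    """Two-state (CFN) distance -1/2 ln(1 - 2p), skipping sites with '?'.

    Valid only when the proportion p of differing sites is below 1/2.
    """
    pairs = [(a, b) for a, b in zip(seq1, seq2) if "?" not in (a, b)]
    p = sum(a != b for a, b in pairs) / len(pairs)
    return -0.5 * math.log1p(-2.0 * p)  # log1p(x) = ln(1 + x)

# Hypothetical sequences: two transitions (T->C, C->T) and one transversion (A->T).
x = ry_code("ACGTTAGCAN")
y = ry_code("ACGTCAGTTN")
print(x, y, cfn_distance(x, y))
```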

Amino acid substitution models

For many analyses, particularly for longer evolutionary distances, evolution is modeled at the amino acid level. Since not all DNA substitutions alter the encoded amino acid, information is lost when looking at amino acids instead of nucleotide bases. However, several advantages speak in favor of using the amino acid information. DNA is much more inclined to show compositional bias than amino acids. Not all positions in the DNA evolve at the same speed (non-synonymous mutations are less likely to become fixed in the population than synonymous ones). Probably most important, because of those fast-evolving positions and the limited alphabet size (only four possible states), DNA suffers from more back substitutions, making it difficult to accurately estimate longer evolutionary distances.

Unlike the DNA models, amino acid models traditionally are empirical models. They were pioneered in the 1960s and 1970s by Dayhoff and co-workers by estimating replacement rates from protein alignments with at least 85% identity (originally with very limited data and ultimately culminating in the Dayhoff PAM model of 1978). This minimized the chances of observing multiple substitutions at a site. From the estimated rate matrix, a series of replacement probability matrices were derived, known under names such as PAM250. Log-odds matrices based on the Dayhoff PAM model were commonly used to assess the significance of homology search results. However, the BLOSUM matrices have superseded the PAM log-odds matrices in this context because the BLOSUM matrices appear to be more sensitive across a variety of evolutionary distances.
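The relationship between a one-step replacement matrix and the PAM series can be sketched numerically. PAMn is obtained by raising PAM1 to the nth power. A log-odds score compares the probability of a replacement with the background frequency of the replacing residue. The sketch below does not use Dayhoff's data. It builds a hypothetical four-state PAM1-like matrix as a first-order step from a reversible rate matrix, so that 1% of sites are expected to change. It reports scores in bits (log base 2), which is an arbitrary scaling choice.

```python
import numpy as np

# Hypothetical four-state model (placeholder values, not Dayhoff's estimates).
f = np.array([0.1, 0.2, 0.3, 0.4])    # background/equilibrium frequencies
R = np.array([[0.0, 1.0, 2.0, 1.0],
              [1.0, 0.0, 1.0, 3.0],
              [2.0, 1.0, 0.0, 1.0],
              [1.0, 3.0, 1.0, 0.0]])  # symmetric exchangeabilities
Q = R * f[np.newaxis, :]
np.fill_diagonal(Q, -Q.sum(axis=1))
Q /= -(f @ np.diag(Q))                # one expected change per unit time

# PAM1-like matrix: 1% expected change per site (first-order step I + 0.01 Q).
PAM1 = np.eye(4) + 0.01 * Q

def pam(n: int) -> np.ndarray:
    """PAMn as the n-th matrix power of PAM1."""
    return np.linalg.matrix_power(PAM1, n)

def log_odds_bits(M: np.ndarray) -> np.ndarray:
    """Score S_ij = log2(M_ij / f_j): replacement probability versus chance."""
    return np.log2(M / f[np.newaxis, :])

print(np.round(log_odds_bits(pam(250)), 2))
```

Because the underlying model is time reversible, the resulting score matrix is symmetric, as a substitution scoring matrix is expected to be.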

The Dayhoff PAM matrix was the source of the exchangeability parameters used in one of the first maximum-likelihood analyses of phylogeny that used protein data, and the PAM model (or an improved version of the PAM model called DCMut) continues to be used in phylogenetics. However, the limited number of alignments used to generate the PAM model (reflecting the limited amount of sequence data available in the 1970s) almost certainly inflated the variance of some rate matrix parameters (alternatively, the proteins used to generate the PAM model could have been a non-representative set). Regardless, it is clear that the PAM model seldom fits most datasets as well as more modern empirical models do (Keane et al. 2006 tested thousands of vertebrate, bacterial, and archaeal proteins and found that the Dayhoff PAM model was the best-fitting model for fewer than 4% of the proteins).

Starting in the 1990s, the rapid expansion of sequence databases due to improved sequencing technologies led to the estimation of many new empirical matrices (see for a complete list). The earliest efforts used methods similar to those used by Dayhoff, using large-scale matching of the protein database to generate a new log-odds matrix and the JTT (Jones-Taylor-Thornton) model. The rapid increases in computing power during this time (reflecting factors such as Moore's law) made it feasible to estimate parameters for empirical models using maximum likelihood (e.g., the WAG and LG models) and other methods (e.g., the VT and PMB models). The IQ-TREE software package allows users to infer their own time-reversible model using QMaker, or a non-time-reversible model using nQMaker.

Another set of substitution models of protein evolution directly incorporates information from the protein structure and provides more realistic modeling in terms of likelihood and biological meaning.

The no common mechanism (NCM) model and maximum parsimony

In 1997, Tuffley and Steel described a model that they named the no common mechanism (NCM) model. The topology of the maximum likelihood tree for a specific dataset given the NCM model is identical to the topology of the optimal tree for the same data given the maximum parsimony criterion. The NCM model assumes all of the data (e.g., homologous nucleotides, amino acids, or morphological characters) are related by a common phylogenetic tree. Then